Datacenter Utilization Boosted

Jim Ballingall, Executive Director, Industry-Academia Partnership

1/28/2014 08:20 AM EST

eetimes.com

Most cloud computing facilities operate at low utilization, often under 10%, which hurts both cost effectiveness and the ability to scale these large datacenters. With many datacenters consuming tens of megawatts, adding servers can strain the capacity of the local electrical plant to deliver power.

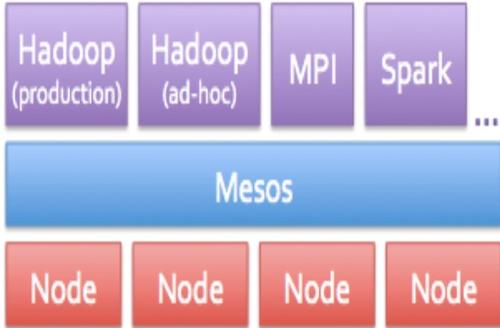

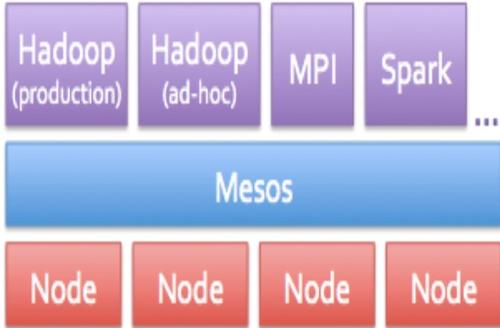

Some datacenter users have turned to open-source cluster management frameworks such as Mesos and YARN to improve resource utilization. These tools let diverse frameworks and workloads share a single cluster instead of dedicating a cluster to each workload.

Mesos and YARN act as a layer of abstraction between applications and pools of servers, using Linux containers to isolate frameworks. They eliminate the need for a hypervisor, removing the associated virtualization overhead and moving the application closer to the hardware (see below).

The Mesos framework, originally developed at UC Berkeley in 2009, recently became a top-level project at the Apache Software Foundation. Twitter and Airbnb are prominent developers and users of Mesos.

UC Berkeley's AMPLab developed Mesos in 2009.

But even with these tools, utilization still typically runs below 20%. Responding to this problem, researchers Christina Delimitrou and Professor Christos Kozyrakis from Stanford presented innovative work at a recent IAP-Stanford Cloud workshop.

Delimitrou and Kozyrakis studied utilization for a production cluster at Twitter with thousands of servers managed by Mesos over a one-month period. The cluster mostly hosts user-facing services and has an aggregate CPU utilization consistently below 20%, even though reservations by users for server usage reached up to 80% of total capacity.

The problem was that, although about 20% of users underestimated the resources their workloads needed, users in aggregate reserved three to five times more resources than they actually used. Delimitrou and Kozyrakis described an automated cluster management system they call Quasar that addresses this issue, providing a quality-of-service-aware management system to support Mesos and other frameworks.
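To make the gap concrete, the short sketch below computes how many times larger a reservation is than actual usage, using the aggregate figures quoted for the Twitter cluster (reservations up to 80% of capacity, utilization below 20%). The function name is illustrative, and treating 20% as the usage figure is an assumption: it is the upper bound reported, so the real factor would be at least this large.

```python
def over_reservation_factor(reserved_fraction: float, used_fraction: float) -> float:
    """Return how many times larger the reservation is than actual usage."""
    if used_fraction <= 0:
        raise ValueError("used_fraction must be positive")
    return reserved_fraction / used_fraction


# Assumed inputs: 80% of capacity reserved, 20% of capacity actually used.
factor = over_reservation_factor(0.80, 0.20)
print(f"{factor:.1f}x")  # prints 4.0x
```

A factor of about four falls inside the three- to five-fold range the researchers reported.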

Quasar increases resource utilization and, at the same time, provides high application performance. It does this work in four stages.

First, Quasar does not rely on resource reservations by users, the practice that led to the under-utilization the Stanford researchers observed. Instead, users express performance requirements for their workloads. Second, it quickly and accurately determines how the amount of resources, the type of resources, and interference from other workloads affect performance for each workload and dataset.

Third, Quasar performs resource allocation and assignment jointly, since the two are interdependent, evaluating options for an efficient way to pack workloads on available resources. Finally, it continually monitors workload performance and adjusts resource allocation and assignment when needed.
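The four stages can be illustrated with a toy scheduler. This is a minimal sketch under stated assumptions, not Quasar's algorithm: the `Workload` class, the per-workload `estimate` function (standing in for the performance-estimation stage), the best-fit packing rule, and the one-core `adjust` step are all hypothetical simplifications chosen for brevity.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Workload:
    name: str
    target: float                     # stage 1: a performance target, not a reservation
    estimate: Callable[[int], float]  # stage 2 (assumed model): cores -> predicted performance


def smallest_allocation(w: Workload, max_cores: int) -> Optional[int]:
    """Return the fewest cores predicted to meet the target, or None if none do."""
    for cores in range(1, max_cores + 1):
        if w.estimate(cores) >= w.target:
            return cores
    return None


def place(workloads: List[Workload], free: Dict[str, int]) -> Dict[str, Tuple[str, int]]:
    """Stage 3 (toy version): size each workload, then best-fit it onto a server.

    `free` maps server name to free cores and is updated in place.
    """
    placement: Dict[str, Tuple[str, int]] = {}
    for w in workloads:
        cores = smallest_allocation(w, max(free.values(), default=0))
        if cores is None:
            continue  # no single server can meet the target; leave unplaced
        candidates = [s for s, c in free.items() if c >= cores]
        server = min(candidates, key=lambda s: free[s])  # tightest fit
        free[server] -= cores
        placement[w.name] = (server, cores)
    return placement


def adjust(cores: int, measured: float, target: float, step: int = 1) -> int:
    """Stage 4 (toy version): grow the allocation when measured performance falls short."""
    return cores + step if measured < target else cores


# Hypothetical workloads whose performance scales linearly with cores.
jobs = [
    Workload("web", target=250.0, estimate=lambda c: 100.0 * c),
    Workload("batch", target=500.0, estimate=lambda c: 100.0 * c),
]
servers = {"a": 4, "b": 8}
print(place(jobs, servers))
```

Sizing each workload from its target, rather than accepting a user-supplied reservation, is the point of the illustration: allocated cores track what the estimate says is needed.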

Stanford implemented a prototype for Quasar that runs on Linux and OS X and currently supports applications written in C/C++, Java, and Python. The API includes functions to express performance constraints and the types of submitted workloads. It also provides calls to check the status of a job, revoke it, or update system constraints.
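The article does not give the actual function names or signatures, so the following is only a hypothetical sketch of what an interface with those capabilities might look like. Every name here (`ToyManager`, `submit`, `status`, `revoke`, `update_constraint`, the enum values) is invented for illustration and none of it comes from Quasar. The constraint is modeled as a per-job free-form string, which is also an assumption.

```python
import itertools
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class WorkloadType(Enum):
    ANALYTICS = "analytics"
    LATENCY_CRITICAL = "latency_critical"
    SINGLE_NODE = "single_node"


class JobStatus(Enum):
    PENDING = "pending"
    REVOKED = "revoked"


@dataclass
class Job:
    workload_type: WorkloadType
    constraint: str  # hypothetical free-form constraint, e.g. "p99 latency <= 10 ms"
    status: JobStatus = JobStatus.PENDING


class ToyManager:
    """In-memory stand-in for a cluster manager front end (illustrative only)."""

    def __init__(self) -> None:
        self._jobs: Dict[int, Job] = {}
        self._ids = itertools.count(1)

    def submit(self, workload_type: WorkloadType, constraint: str) -> int:
        """Register a job with its type and performance constraint; return its id."""
        job_id = next(self._ids)
        self._jobs[job_id] = Job(workload_type, constraint)
        return job_id

    def status(self, job_id: int) -> JobStatus:
        return self._jobs[job_id].status

    def revoke(self, job_id: int) -> None:
        self._jobs[job_id].status = JobStatus.REVOKED

    def update_constraint(self, job_id: int, constraint: str) -> None:
        self._jobs[job_id].constraint = constraint


manager = ToyManager()
job = manager.submit(WorkloadType.LATENCY_CRITICAL, "p99 latency <= 10 ms")
manager.update_constraint(job, "p99 latency <= 5 ms")
manager.revoke(job)
print(manager.status(job))
```

Note that the caller never states how many cores or how much memory the job needs; it states only what kind of workload it is and how well it must perform.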

Delimitrou and Kozyrakis evaluated Quasar over a wide range of workload scenarios, including combinations of distributed analytics frameworks and low-latency, stateful services, both on a local cluster and on a cluster of dedicated Amazon EC2 servers. In tests, Quasar managed analytics frameworks including Hadoop, Storm, and Spark; latency-critical services such as NoSQL workloads; and conventional single-node workloads.

There was no need to modify any applications or frameworks, and the framework-specific code in Quasar is small, between 100 and 600 lines of code depending on the framework. The results are dramatic: Quasar improves utilization by 47%. Allocated resources in the 200-server EC2 cluster were nearly identical to the resources actually used, while the performance constraints for workloads of all types were still met.

In the future, Delimitrou and Kozyrakis plan to merge the Quasar classification and scheduling algorithms into cluster management frameworks like OpenStack and Mesos. The full paper is available online and will be presented at ASPLOS 2014 in March.