What Exactly Is InfiniBand, and Why Do Supercomputing Centers Love It?

news.watchstor.com

We often hear about supercomputing centers and the advanced technology they use, and InfiniBand is among the most talked-about of those technologies. So the question is: what makes InfiniBand so special?

Simply put, InfiniBand is a switched interconnect technology that supports many concurrent links. It can carry storage I/O, network I/O, and interprocess communication (IPC), so it can interconnect disk arrays, SANs, LANs, servers, and cluster servers, and can also connect to external networks such as WANs, VPNs, and the Internet.

InfiniBand is designed primarily for enterprise data centers, large or very large, with the goals of high reliability, availability, scalability, and performance. It provides high-bandwidth, low-latency transmission over relatively short distances and supports redundant I/O channels across one or more interconnected networks, so the data center can keep running when a local failure occurs.

InfiniBand gives supercomputing centers superior transmission performance

For these reasons, more and more supercomputing centers have adopted InfiniBand, and it is used especially widely in data centers with very demanding transmission-performance requirements. Recently, Gilad Shainer, Vice President of Marketing at Mellanox, a leading provider of InfiniBand solutions, spoke in detail about the market competitiveness of InfiniBand solutions and the new performance and scalability they offer users worldwide.

Mr. Gilad Shainer, Vice President of Marketing, Mellanox

Gilad Shainer noted that in the current TOP500 supercomputer ranking, 194 systems use Mellanox network products, about 39% of the list. In his view, this shows that InfiniBand solutions still hold a commanding share of the high-performance computing market, far ahead of the other interconnect vendors in the field.

It should also be noted that InfiniBand remains the clear leader in pure high-performance computing clusters. Among petascale systems, Mellanox's InfiniBand solutions hold nearly half the market, with a 46% share, which shows that Mellanox remains the leading high-speed network for high-performance computing systems.

Among the systems that were new to the TOP500 in 2016, 65 chose Mellanox products, far more than chose any competitor. That is four times the number using the competing Omni-Path network from Intel and five times the number using the competing Cray interconnect.
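The "about 39%" figure follows directly from the two numbers quoted here. A minimal check, assuming only that the TOP500 list contains 500 systems (the variable names are illustrative):

```python
# Figures quoted in the article; TOP500_SIZE is the fixed length of the list.
TOP500_SIZE = 500
mellanox_systems = 194  # systems using Mellanox network products

share = mellanox_systems / TOP500_SIZE
share_percent = round(share * 100)

print(f"{mellanox_systems} of {TOP500_SIZE} systems = {share:.1%}")
# prints: 194 of 500 systems = 38.8%
```

Rounded to a whole number, 38.8% gives the 39% cited in the interview.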

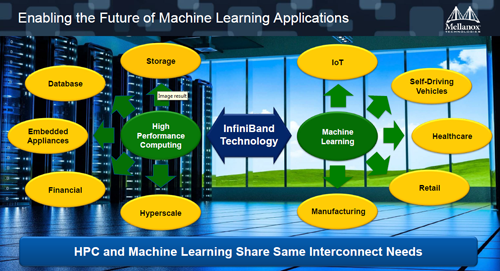

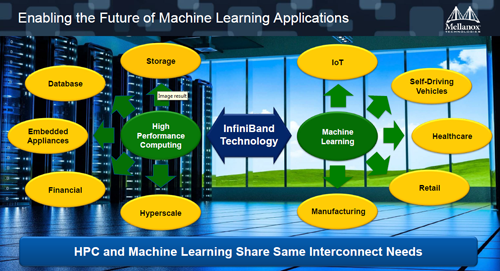

Notably, Mellanox networks have begun expanding into a number of other fields, in particular the recently very popular areas of machine learning and artificial intelligence. Mellanox found that the way these emerging fields analyze and read large amounts of data closely resembles high-performance computing. Through machine learning and artificial intelligence, Mellanox networks are therefore spreading rapidly into the Internet of Things, autonomous driving, healthcare, manufacturing, retail, and many other industries.

Mellanox leads the data center revolution

In addition to InfiniBand solutions, Mellanox also provides Ethernet products, and it has innovated strongly there as well: it interconnects all of the 40 Gigabit Ethernet systems in the TOP500, as well as the first 100G Ethernet system.

Mellanox's innovation is evident across the industry. According to Gilad Shainer, Mellanox's main focus now is leading a change in data center architecture, one that will have a major impact on traditional data centers.

Mellanox observes that traditional data centers follow a CPU-centric computing model: all data must be moved to the CPU before it can be processed. As the volume of data movement grows, it becomes a huge bottleneck for the whole application, because components must wait on one another for transfers to complete, which seriously slows data center processing.

Mellanox believes that data center architecture is now evolving away from the traditional CPU-centric model toward a new data-centric one. The principle of the data-centric architecture is: analyze the data wherever the data is.

So to analyze data efficiently, you need to move computing power to the data rather than moving the data to the computation.

Data no longer has to reach the CPU before it is analyzed; it can be analyzed wherever it flows, including inside the network. This shift from "CPU-centric" to "data-centric" is the future direction of data center architecture.
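The difference between the two models can be illustrated with a toy calculation. The sketch below is not a Mellanox API or a model of any real product; `Node`, `cpu_centric_sum`, `data_centric_sum`, and the 8-byte value size are all hypothetical names and assumptions chosen for illustration. It compares how many bytes must cross the network when every raw value is shipped to a central CPU versus when each node reduces its own data and ships only the result.

```python
from dataclasses import dataclass
from typing import List, Tuple

BYTES_PER_VALUE = 8  # assumption: each value is a 64-bit float


@dataclass
class Node:
    """A hypothetical node holding one shard of the data set."""
    values: List[float]


def cpu_centric_sum(nodes: List[Node]) -> Tuple[float, int]:
    """Ship every raw value to one central CPU, then add them up there."""
    moved = sum(len(n.values) for n in nodes) * BYTES_PER_VALUE
    gathered = [v for n in nodes for v in n.values]
    return sum(gathered), moved


def data_centric_sum(nodes: List[Node]) -> Tuple[float, int]:
    """Add up each shard where it lives; ship one partial sum per node."""
    partials = [sum(n.values) for n in nodes]
    moved = len(partials) * BYTES_PER_VALUE
    return sum(partials), moved


# Four nodes, each holding 1000 copies of its own index as a float.
nodes = [Node([float(i)] * 1000) for i in range(4)]
total_a, bytes_a = cpu_centric_sum(nodes)
total_b, bytes_b = data_centric_sum(nodes)
print(total_a, bytes_a)
print(total_b, bytes_b)
```

Both approaches arrive at the same total, but in this toy setup the data-centric version moves 32 bytes instead of 32,000. Real systems involve many more factors (latency, topology, the kind of reduction), so the ratio here is only indicative of the idea.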

Mellanox has proposed the concept of Co-Design, which is based on a task-offloading architecture. Offloading makes technologies such as RDMA (Remote Direct Memory Access) possible, something the traditional onloading architecture cannot do. Intelligent interconnects and offload technology in turn allow high-performance computing systems to keep scaling.

Liu Tong, Senior Director of Market Development, Mellanox Asia Pacific and China

"In order to better establish a platform for close technical exchange with Chinese customers, Mellanox will build Mellanox Centers of Excellence in China with a number of key R&D-focused customers," said Liu Tong, Senior Director of Market Development for Mellanox Asia Pacific and China. "The center is based on the Co-Design concept, which delivers the ultimate in HPC performance."

Mellanox solutions make interconnects smarter

Mellanox continues to develop intelligent networks that help the network itself process data. In November this year, Mellanox announced the world's first 200Gb/s data center interconnect solution. Mellanox ConnectX-6 adapters, Quantum switches, and LinkX cables and transceivers together form a complete 200Gb/s HDR InfiniBand interconnect infrastructure for next-generation high-performance computing, machine learning, big data, cloud, Web 2.0, and storage platforms.

The 200Gb/s HDR InfiniBand solution further strengthens Mellanox's market leadership while letting customers and users take advantage of open, standards-based technology to maximize application performance and scalability and dramatically reduce data center total cost of ownership. The Mellanox 200Gb/s HDR solution is scheduled to ship in volume in 2017.

The Mellanox 200Gb/s HDR InfiniBand Quantum switch is the world's fastest switch, supporting 40 ports of 200Gb/s InfiniBand or 80 ports of 100Gb/s InfiniBand for a total switching capacity of 16Tb/s, with an extremely low latency of just 90ns (nanoseconds). Mellanox Quantum switches feature the most scalable switching chip on the market, providing an optimized and flexible routing engine with support for in-network computing.

To build a complete end-to-end 200Gb/s InfiniBand infrastructure, Mellanox will also release its latest LinkX solutions, offering users 200Gb/s copper and silicon photonics cables in a range of lengths.
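The port counts and the 16Tb/s figure are consistent with each other if the total is read as full-duplex capacity, that is, counting traffic in both directions on every port. That reading is an assumption here, not something the article states. A short sketch of the arithmetic, using a hypothetical helper `switch_capacity_tbps` and taking 1Tb/s = 1000Gb/s:

```python
def switch_capacity_tbps(ports: int, gbps_per_port: int, duplex: bool = True) -> float:
    """Aggregate switching capacity in Tb/s.

    Assumption: with duplex=True the capacity counts both directions
    of every port, which is how the 16Tb/s figure is interpreted here.
    """
    per_direction_gbps = ports * gbps_per_port
    total_gbps = per_direction_gbps * (2 if duplex else 1)
    return total_gbps / 1000  # 1 Tb/s = 1000 Gb/s


hdr_mode = switch_capacity_tbps(ports=40, gbps_per_port=200)
split_mode = switch_capacity_tbps(ports=80, gbps_per_port=100)
one_way = switch_capacity_tbps(ports=40, gbps_per_port=200, duplex=False)

print(hdr_mode, split_mode, one_way)
# prints: 16.0 16.0 8.0
```

Both port configurations give the same aggregate, which is why the switch can be described as either 40 ports at 200Gb/s or 80 ports at 100Gb/s.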

Today, as we enter a data-centric era, Mellanox, as a provider of high-performance end-to-end interconnect solutions, will need to take on a larger role in guiding the industry. Mellanox is now working with a number of partners in China to develop open-architecture solutions tailored to the needs of different industries, to help domestic customers break through inherent hardware bottlenecks, and to promote the adoption of network innovation in China.

WatchStor Zhao Weimin Shu 2016-12-30