Dip Transform for 3D Shape Reconstruction

Kfir Aberman1,2 Oren Katzir1,2 Qiang Zhou3 Zegang Luo3 Andrei Sharf1,5 Chen Greif4

Baoquan Chen*3 Daniel Cohen-Or2

1 AICFVE, Beijing Film Academy; 2 Tel-Aviv University; 3 Shandong University; 4 University of British Columbia; 5 Ben-Gurion University of the Negev

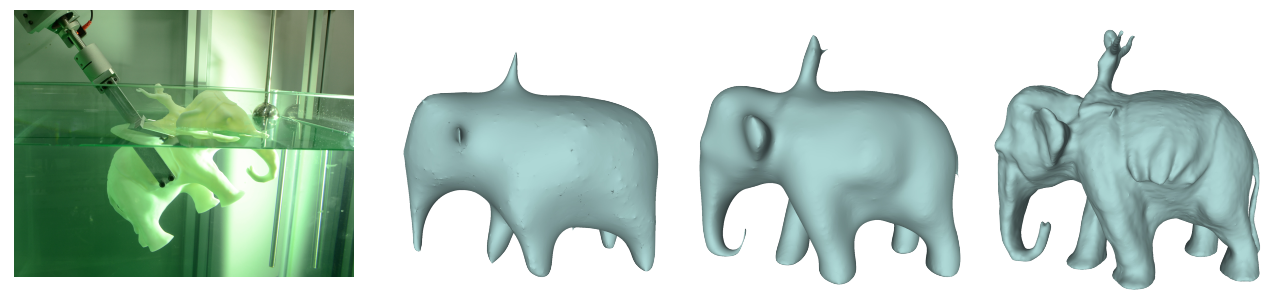

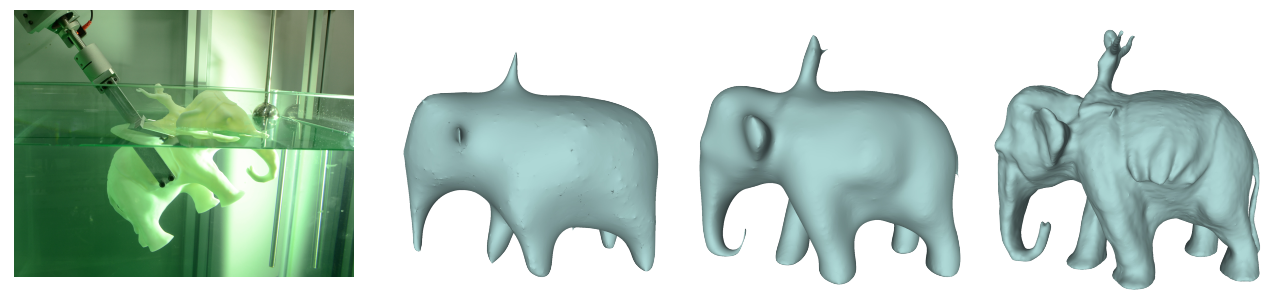

3D scanning using a dip scanner. A robot arm dips the object into a bath of water (left), acquiring its dip transform. The reconstruction quality improves as the number of dipping orientations increases (from left to right).

*Corresponding author

Abstract

The paper presents a novel three-dimensional shape acquisition and reconstruction method based on the well-known Archimedes equality between fluid displacement and the submerged volume. By repeatedly dipping a shape in liquid in different orientations and measuring its volume displacement, we generate the dip transform: a novel volumetric shape representation that characterizes the object's surface. The key feature of our method is that it employs fluid displacement as the shape sensor. Unlike optical sensors, the liquid has no line-of-sight requirements: it penetrates cavities and hidden parts of the object and is unaffected by transparent and glossy materials, thus bypassing all visibility and optical limitations of conventional scanning devices. We implement the scanner with a robot arm that dips the object into a bath of water and measures the water elevation. We show results of reconstructing complex 3D shapes and evaluate the reconstruction quality with respect to the number of dips.
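To make the measurement concrete, the sketch below simulates a single dip of a voxelized object: it computes the submerged volume as the object is lowered along one orientation and converts that volume into a water-level rise via Archimedes' principle. This is not the authors' implementation. The voxel-grid input, the helper names `dip_curve` and `water_rise`, the bath of constant cross-sectional area, and the rule that a voxel counts as submerged once the liquid reaches its center are all assumptions made for illustration. The sketch also ignores the extra submersion caused by the rising water itself.

```python
import numpy as np


def dip_curve(occupancy, voxel_size, up, num_levels=64):
    """Simulate one dip of a voxelized object (hypothetical helper).

    occupancy  -- 3D boolean array, True where the object is solid
    voxel_size -- edge length of one cubic voxel
    up         -- normal of the liquid surface in object coordinates;
                  the object is lowered against this direction
    Returns (depths, volumes): the submerged volume after lowering the
    object by each depth, measured from its lowest point.
    """
    u = np.asarray(up, dtype=float)
    u = u / np.linalg.norm(u)
    # Centers of the solid voxels in the object's coordinate frame.
    centers = (np.argwhere(occupancy) + 0.5) * voxel_size
    # Height of each voxel center along the up direction.
    heights = centers @ u
    # Approximation: a voxel counts as submerged once the liquid reaches its
    # center; depth 0 lies half a voxel below the lowest center.
    rel = np.sort(heights - (heights.min() - 0.5 * voxel_size))
    depths = np.linspace(0.0, rel[-1], num_levels)
    # Count voxel centers at or below each depth, then scale by voxel volume.
    counts = np.searchsorted(rel, depths, side="right")
    volumes = counts * voxel_size**3
    return depths, volumes


def water_rise(volumes, bath_area):
    """Archimedes: displaced volume spread over a bath of constant cross-section."""
    return np.asarray(volumes, dtype=float) / bath_area


if __name__ == "__main__":
    cube = np.ones((10, 10, 10), dtype=bool)
    depths, vols = dip_curve(cube, 1.0, up=(0, 0, 1), num_levels=20)
    print(vols[0], vols[10], vols[-1])  # 0.0 500.0 1000.0
    print(water_rise(vols, bath_area=400.0)[-1])  # 2.5
```

Repeating such a dip over many orientations yields a collection of volume-versus-depth curves, which is the kind of data the dip transform gathers. Inverting those measurements into a 3D shape is the reconstruction problem the paper addresses, and it is not shown here.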


More results

Comparison of 3D dip reconstructions. (a) The objects during dipping. (b) Profile photographs of the printed objects. (c) Reconstruction by a structured-light scanner. (d) Our 3D reconstruction using the dipping robot. Occluded parts of the body have no line of sight to the scanner's sensor, whereas the dipping robot, using water, can reconstruct these hidden parts.


Acknowledgement

We thank the anonymous reviewers for their helpful comments. Thanks also go to Haisen Zhao, Hao Li, Huayong Xu, and the rest of the SDU lab-mates for their tremendous help on production. This project was supported in part by the Joint NSFC-ISF Research Program (No. 61561146397), jointly funded by the National Natural Science Foundation of China and the Israel Science Foundation, by the National Basic Research grant (973) (No. 2015CB352501), and by the NSERC of Canada grant 261539.