Great primer on NAND/DRAM markets and technologies. I am sure many of us are already aware of most of the points in the article, but there are some interesting comments toward the end.

Link: seekingalpha.com

Memory Market For Dummies

Aug. 23, 2018 2:30 PM ET

Includes: HXSCF, INTC, MU, SSNLF, WDC

by: Zynath Investment

Value, event-driven, tech, biotech

Summary

In this article we look at current memory technology.

We also examine some of the promising future technologies like 3D XPoint and MRAM.

Finally, we look at the cyclical nature of the memory industry and discuss reasons why this dynamic is unlikely to continue.

Recently I gave a presentation to a group of institutional investors about several opportunities in the memory industry. During the Q&A session that followed, it became clear that many investors have a relatively foggy understanding of the specifics of the memory industry. I wrote the following in the hope of giving the reader a bird's-eye view of the computer memory industry:

Are you an investor with a position in Micron (MU), Samsung (OTC:SSNLF), SK Hynix (OTC:HXSCF), Intel (INTC), Western Digital (WDC), or Toshiba (OTCPK:TOSYY)? Do you often read industry press releases and articles about the state of the memory industry, only to be left more confused at the end than at the beginning because of the complex terminology? Well, you're in luck. In this article we are going to do something a little different: we are going to demystify some aspects of the memory industry, which should help you better navigate other informative articles and presentations on the subject (such as our own). Let's get started.

Current Memory Technologies

(Chart Courtesy of Zynath Group)

Currently the two most common computer memory technologies on the market are DRAM and NAND flash. DRAM, short for dynamic random access memory, is the operating memory of your laptop or mobile device. Operating memory is best thought of as short-term memory: it is the memory your computer uses to "store" the things it is currently working on. We put "store" in quotes because this type of memory is volatile. By volatile we mean that if the electrical supply to the DRAM module is interrupted, it almost instantly erases, or "forgets", everything stored on it.

By contrast, NAND memory is nonvolatile, aka persistent. It takes its name from the NAND logic gate: its memory cells are wired in series in an arrangement that resembles a NAND gate. NAND memory is primarily used as storage for mobile devices, laptop computers and server applications. It's where all your cat pictures are stored! But if NAND memory is persistent, why does anybody even bother with DRAM?

While NAND is persistent, it has three significant drawbacks compared with DRAM: it is slower in both raw throughput and latency, and it has a limited lifespan. Let's take these one at a time.

When we talk about memory speed, it is important to consider both raw throughput and latency. Raw throughput is probably the metric you are most familiar with: it's the raw speed of the drive. For instance, you may see a solid state drive (SSD) advertised with a read and write speed of 512 megabytes per second (MB/s). That means the drive is capable of sequentially reading or writing data at the advertised speed. However, how often do you actually read or write to the drive sequentially? In reality, you only do so when copying large files, such as video files or photographs, or perhaps when loading assets for a video game. In all other instances, the second metric, latency, is far more important.

Latency defines how fast the drive responds after the CPU asks it for a particular piece of data. You can test this yourself: take a folder that contains thousands of photographs and copy it to another location on the same disk, then compare that with the speed of copying a single large file. You will notice that your total MB/s is significantly higher when copying the large file, because the computer only has to initiate the copy procedure once. With a folder containing thousands of small files, the computer has to initiate the copy procedure thousands of times, which slows down the entire process. This was far more evident in the early years of NAND SSDs, but even now, with a large enough dataset, you can notice a significant difference.
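To make the latency point concrete, here is a minimal Python sketch of a simple cost model, assuming each request pays a fixed latency before data streams at the drive's rated sequential speed. The function name and the 100-microsecond latency are hypothetical values chosen for illustration, not measurements of any particular drive.

```python
def effective_throughput_mb_s(total_mb: float, num_requests: int,
                              latency_s: float, bandwidth_mb_s: float) -> float:
    """Effective MB/s when every request pays a fixed latency.

    Simple model (an assumption, not a drive specification):
        total_time = num_requests * latency + total_mb / bandwidth
    """
    total_time = num_requests * latency_s + total_mb / bandwidth_mb_s
    return total_mb / total_time


# Hypothetical drive: 512 MB/s sequential speed, 100 microseconds per request.
BANDWIDTH_MB_S = 512.0
LATENCY_S = 100e-6

# One 1,000 MB file versus 10,000 small files totaling the same 1,000 MB.
one_big_file = effective_throughput_mb_s(1000, 1, LATENCY_S, BANDWIDTH_MB_S)
many_small_files = effective_throughput_mb_s(1000, 10_000, LATENCY_S, BANDWIDTH_MB_S)

print(f"one large file:     {one_big_file:.0f} MB/s")
print(f"10,000 small files: {many_small_files:.0f} MB/s")
```

Under these made-up numbers the single file moves at about 512 MB/s, while the folder of small files drops to about 339 MB/s, because the per-request latency adds a full second to the job.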

Finally, we get to the lifespan. NAND drives can perform only a limited number of write cycles on a single sector before that sector has deteriorated to the point where it can no longer accept new writes. A good analogy is writing something down on a piece of paper with a pencil and then erasing it. Eventually, you rub a hole through the paper and need to move on to a different sheet to record new information.

To address this issue, NAND drives perform what is essentially a copy-on-write operation: instead of overwriting data in place, they write the new version to a fresh location, which lets the controller spread wear across the whole drive (a technique known as wear leveling). Return to our paper-and-pencil analogy: you write down somebody's phone number but make a mistake, so you erase it and rewrite it, but this time on a different spot of the paper. Why is this better? It lets you spread erase cycles over a larger portion of the NAND drive. In a computer system, some files, such as system program files, are updated very seldom. Others, such as system log files, are written over and over again, hundreds of times per day. If the NAND controller did not practice copy-on-write, it would quickly destroy the sector where that system log is stored.
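The benefit of writing to a fresh location each time can be shown with a toy simulation. This Python sketch compares rewriting one block in place on every update with rotating updates across all blocks; the block count and number of log updates are hypothetical, and a real flash controller's mapping and garbage-collection logic is far more involved.

```python
def wear_in_place(num_blocks: int, num_writes: int) -> list[int]:
    """Every update rewrites the same block, as if data were overwritten in place."""
    erases = [0] * num_blocks
    for _ in range(num_writes):
        erases[0] += 1  # the log file always lives in block 0
    return erases


def wear_out_of_place(num_blocks: int, num_writes: int) -> list[int]:
    """Every update goes to the next block in turn, spreading erases evenly."""
    erases = [0] * num_blocks
    for i in range(num_writes):
        erases[i % num_blocks] += 1
    return erases


NUM_BLOCKS = 1_000      # hypothetical number of blocks on the drive
LOG_UPDATES = 500_000   # hypothetical number of log-file updates

in_place = wear_in_place(NUM_BLOCKS, LOG_UPDATES)
out_of_place = wear_out_of_place(NUM_BLOCKS, LOG_UPDATES)

print("most-worn block, in-place updates:    ", max(in_place), "erases")
print("most-worn block, out-of-place updates:", max(out_of_place), "erases")
```

With these made-up numbers, the busiest block absorbs all 500,000 erases in the first case but only 500 in the second, even though the total amount of writing is identical.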

Generally speaking, NAND flash can handle only a limited number of program/erase cycles on a particular block before that block is worn out, ranging from roughly 100,000 for single-level cell (SLC) parts down to a few thousand or fewer for denser multi-level designs. By contrast, DRAM is, for all practical purposes, unlimited in the number of read and write operations it can perform on a particular location. This is why DRAM doesn't need a copy-on-write operation: data can be stored in the same place it is read from. However, DRAM can only hold data for a very short period of time, because each bit is stored as charge in a tiny capacitor on the memory die. As those capacitors discharge, the data has to be refreshed. This happens many times a second to keep the data intact, which is why a DRAM module quickly forgets everything stored on it as soon as its power is cut off. Intuitively, you can think of it as trying to remember a list of unrelated items that somebody just told you. You can remember it by repeating the list in your mind over and over again, but as soon as you stop repeating it, it quickly fades from your memory. That, in essence, is DRAM at work.
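The "keep repeating the list" analogy can be sketched as a toy model. This Python code assumes, purely for illustration, that a cell's charge leaks away exponentially and that a stored 1 still reads as 1 only while the charge stays above half of full. The leakage time constant and threshold are hypothetical; the 64 ms refresh interval mirrors the refresh window used by DDR3 and DDR4 at normal operating temperature.

```python
import math
from typing import Optional


def charge_after(elapsed_ms: float, tau_ms: float) -> float:
    """Fraction of full charge left elapsed_ms after the cell was last written."""
    return math.exp(-elapsed_ms / tau_ms)


def bit_survives(total_ms: int, tau_ms: float, refresh_ms: Optional[int],
                 threshold: float = 0.5) -> bool:
    """Return True if a stored 1 can still be read after total_ms milliseconds.

    The cell is checked once per millisecond. If refresh_ms is given, the cell
    is read and rewritten to full charge every refresh_ms milliseconds; if it
    is None, the cell is never refreshed.
    """
    since_write = 0
    for _ in range(total_ms):
        since_write += 1
        if charge_after(since_write, tau_ms) < threshold:
            return False  # charge fell below the threshold: the 1 now reads as 0
        if refresh_ms is not None and since_write >= refresh_ms:
            since_write = 0  # refresh restores full charge
    return True


TAU_MS = 200.0  # hypothetical leakage time constant, not a real DRAM figure

print("refreshed every 64 ms:", bit_survives(1_000, TAU_MS, refresh_ms=64))
print("never refreshed:      ", bit_survives(1_000, TAU_MS, refresh_ms=None))
```

With refresh the bit survives indefinitely; without it, the charge in this model drops below the threshold after about 139 ms and the data is gone.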

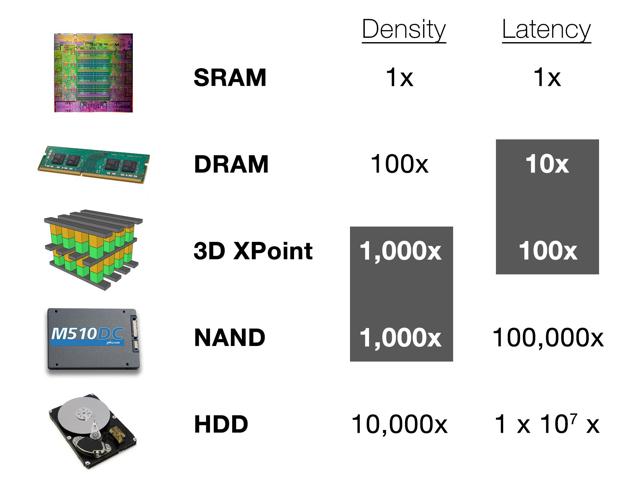

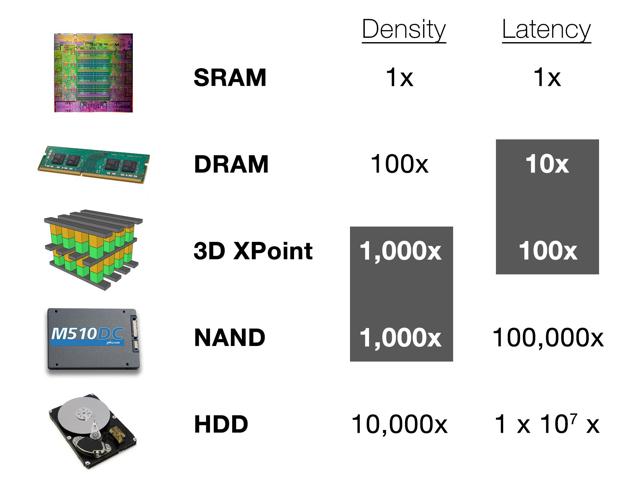

With persistence out of the way, it is also important to note that DRAM is several times faster than NAND in raw throughput and thousands of times faster in latency. This is why we use DRAM for short-term storage and NAND for long-term, permanent storage.

(Chart Courtesy of Zynath Group)

Now that we've talked about currently popular memory technologies let's see what is on the horizon for the memory industry.

Upcoming Memory Technologies

While there are many prospective memory technologies currently in development, in this section we are going to concentrate on the two that are closest to reality: 3D XPoint, currently developed by a joint venture between Intel and Micron, and MRAM, which is currently being developed by Everspin Technologies.

We have written several articles about Micron and 3D XPoint, which you can read here and here. As a short summary, 3D XPoint is a nonvolatile memory (NVM) technology that shares characteristics of both DRAM and NAND. It has the high density and persistence of NAND memory, with speed, latency and lifespan much closer to those of DRAM. 3D XPoint uses a class of materials called chalcogenides to store data. The chalcogenide changes its physical structure, its phase, between a disordered (amorphous) state and an ordered (crystalline) state depending on whether a cell is storing a 0 or a 1. This is why this type of memory is called phase change memory (PCM). 3D XPoint is currently at the very early stages of ramping up, with significant sales volume predicted for the second half of 2019. It is available under the Optane brand from Intel and the QuantX brand from Micron.

MRAM is short for magnetoresistive random access memory. This memory has a lot of promised benefits: it is persistent, extremely fast, extremely durable, and has very low energy consumption. That sounds amazing, doesn't it? Well, as usual, the devil is in the details. MRAM is not actually a new concept. The idea behind this memory has existed for decades, but implementing it poses significant hurdles.

For instance, each of the four favorable attributes listed above can be achieved in an MRAM module. What is often not stated, however, is that they trade off against one another. Let's take a look at persistence, longevity and energy usage.

To make an MRAM module store information for a very long time, you have to significantly increase the electrical load with which magnetic polarity is applied to the memory. However, this increased load tends to wear out the memory faster and obviously makes it less energy efficient. As a result, you could have an MRAM chip that stores data for a long time but is not very durable or energy efficient. Alternatively, you can create an MRAM module that stores data only for a short period of time, much like DRAM; and much like DRAM, it would have high longevity, while unlike DRAM, it would have pretty good energy efficiency.
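This trade-off is often described with the Néel-Arrhenius relation, retention time ≈ τ0 · exp(Δ), where Δ is the cell's thermal stability factor (its energy barrier divided by kT). In spin-transfer-torque MRAM, the current needed to switch a cell grows roughly in proportion to Δ. The Python sketch below assumes τ0 = 1 ns and a simple linear current model normalized to a hypothetical reference design at Δ = 40; it is a simplified single-cell approximation, not a design model for Everspin's or anyone else's parts.

```python
import math

TAU0_S = 1e-9                        # assumed attempt time, about 1 ns
SECONDS_PER_YEAR = 365.25 * 24 * 3600
REFERENCE_DELTA = 40.0               # hypothetical reference design for normalizing


def retention_years(delta: float) -> float:
    """Mean retention time of a single cell from the Neel-Arrhenius relation."""
    return TAU0_S * math.exp(delta) / SECONDS_PER_YEAR


def relative_write_current(delta: float) -> float:
    """Write current relative to the reference design, assuming current ~ delta."""
    return delta / REFERENCE_DELTA


for delta in (20.0, 30.0, 40.0, 50.0):
    print(f"delta={delta:4.0f}  retention={retention_years(delta):10.3g} years  "
          f"relative write current={relative_write_current(delta):.2f}")
```

In this model, a cell designed for DRAM-like retention (small Δ) needs much less write current than one designed to hold data for years, which is the same trade-off described above in terms of persistence versus energy and wear.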

Even with those drawbacks, MRAM is still very promising because it can be used as system memory, hybrid memory or storage. However, it has two other drawbacks: density and probabilistic writes. In terms of density, MRAM is about as dense as DRAM, which means that per square millimeter of die space it can store about as much data as a standard DRAM chip. By contrast, 3D XPoint is far denser. The biggest hurdle MRAM has to overcome, however, is probabilistic writes: if you store a piece of data a million times into an MRAM module, there is a chance that at least one of those writes will silently fail. This is a hurdle researchers have been trying to overcome for many years. Everspin Technologies claims to have solved this problem in an efficient way, but it is unclear what kind of yield and pricing Everspin will be able to offer, so MRAM is still some years away from mass adoption.
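The arithmetic behind "at least one of a million writes may silently fail" is simple: if each write independently fails with probability p, the chance of at least one failure in N writes is 1 - (1 - p)^N. A common general-purpose mitigation is write-verify-retry, where each write is read back and repeated if it is wrong, so a write only fails after k consecutive failed attempts (probability p^k, assuming independent attempts). The error rate below is hypothetical, and this sketch does not describe how Everspin actually addressed the problem.

```python
import math


def prob_any_failure(p_fail: float, num_writes: int) -> float:
    """Probability that at least one of num_writes independent writes fails.

    Computed as 1 - (1 - p)^N using log1p/expm1 so tiny probabilities
    do not round to zero.
    """
    return -math.expm1(num_writes * math.log1p(-p_fail))


def effective_fail_rate(p_fail: float, max_attempts: int) -> float:
    """Per-write failure probability with write-verify-retry, up to max_attempts tries."""
    return p_fail ** max_attempts


P_FAIL = 1e-6        # hypothetical raw write error rate per write
WRITES = 1_000_000

no_retry = prob_any_failure(P_FAIL, WRITES)
with_retry = prob_any_failure(effective_fail_rate(P_FAIL, 3), WRITES)

print(f"chance of a failed write, no verification: {no_retry:.3f}")
print(f"chance of a failed write, up to 3 attempts: {with_retry:.3g}")
```

The catch is that every verify and retry costs time and energy, which is part of why yield and pricing, rather than the basic idea, decide how quickly MRAM can be adopted.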

We foresee MRAM one day replacing SRAM (short for static random access memory, the type of memory commonly used on CPU dies) and, possibly in the more distant future, DRAM. But first, the cost and economies of scale of MRAM have to be addressed. For long-term storage and hybrid applications, however, we believe 3D XPoint offers a more promising solution.

Cyclicality in the Memory Industry

As many of you may have noticed, memory companies such as Micron and SK Hynix are trading at ridiculously low multiples. Why is that? In the past, the memory industry, especially the DRAM industry, has been quite cyclical. Many people think this has to do with the demand side of the memory market equation. In reality, it is due to the nature of memory manufacturing itself.

Memory production carries a very high fixed cost and a relatively low incremental cost per die. I am afraid we'll have to go back to college-level economics for this one. As you may remember, fixed costs are the costs of the factory and manufacturing equipment needed to produce a product, while incremental costs are those associated with producing one more unit of output. As a result, once the factory is up and running, a business seeks to maximize its production in order to recoup its capital investment as quickly as possible. In a competitive market with multiple players, of course, this quickly leads to margin deterioration.

Think about it this way: let's say the market is willing to pay $10 per widget for 100 widgets per quarter, but only about $2 per widget for any widget beyond those 100. If your incremental cost per unit is only $1, it makes sense to keep producing and selling those units, even if your total cost of goods sold (incremental plus fixed costs) per unit is significantly higher. Of course, if everybody in the industry does the same thing, the market becomes oversaturated and prices start to plummet. As prices plummet, it becomes unprofitable to remain in the business, and some competitors exit the market or go bankrupt. If the widget in question is a must-have commodity, as memory is, this results in a period of undersupply that drives prices up significantly again.
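The widget example can be worked through in a few lines of Python. The $10, $2 and $1 figures come from the paragraph above; the $1,500 quarterly fixed cost is a hypothetical number added only so that average total cost can be computed.

```python
FIXED_COST = 1_500.0  # hypothetical quarterly fixed cost (factory, equipment)
UNIT_COST = 1.0       # incremental cost per widget, from the example


def revenue(units: int) -> float:
    """$10 each for the first 100 widgets, $2 each for any beyond 100."""
    premium_units = min(units, 100)
    extra_units = max(units - 100, 0)
    return 10.0 * premium_units + 2.0 * extra_units


def profit(units: int) -> float:
    """Revenue minus fixed cost and incremental cost of every unit."""
    return revenue(units) - (FIXED_COST + UNIT_COST * units)


for units in (100, 200, 300):
    avg_total_cost = (FIXED_COST + UNIT_COST * units) / units
    print(f"{units} widgets: profit {profit(units):+,.0f} USD, "
          f"average total cost {avg_total_cost:.2f} USD per widget")
```

Every widget beyond 100 sells for $2 but costs only $1 more to make, so profit improves by $1 per unit even though the average total cost per widget stays well above the $2 selling price. When every producer reasons the same way, that extra supply is what pushes prices down.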

Hopefully this explains a little bit why the memory industry in the past has been cyclical. Now let's see why we don't believe it is going to be as cyclical in the foreseeable future.

First, the number of players in the DRAM industry has dwindled to just three major ones: Samsung, SK Hynix and Micron, plus a few smaller ones such as Nanya and some Chinese upstarts. The three remaining memory giants are very careful to maintain supply/demand equilibrium in the market and to expand their capacity in a strategic and conservative manner. For instance, here are some quotes from the most recent Applied Materials earnings call transcript that illustrate this point:

"More diverse demand drivers spanning consumer and enterprise markets combined with very disciplined investment has reduced cyclicality. We’re not seeing the large fluctuations in wafer fab equipment spending that we did in the past."

"Customers are making rational investments in new capacity resulting in well balanced supply demand dynamics. "

"The supply demand balance has been reasonably [Technical Difficulty] from DRAM standpoint, it’s led to pricing stability. It's something the customers monitor very granularly. And they’ve been very disciplined, from an investment standpoint, making demand led investments."

Source: AMAT Q3 2018 Earnings Call Transcript

Second, as DRAM technology becomes more complex and dense, it becomes harder and harder to achieve a predictable yield from the start. Let's look at this situation through an analogy. Say you build a new factory to produce widgets. However, these new widgets are very difficult to manufacture. Your factory's maximum capacity is 100 widgets per day, but on opening day you are only able to produce 10 defect-free widgets. Of course, you are not very happy with this result, so you send your best engineers to figure out how to reduce the defect rate. After a few weeks of fine-tuning the production line, you are able to make 30 defect-free widgets per day. A few more months and several process improvements later, your output is up to 50, then 60, and finally you reach your maximum capacity of 100 defect-free widgets per day.

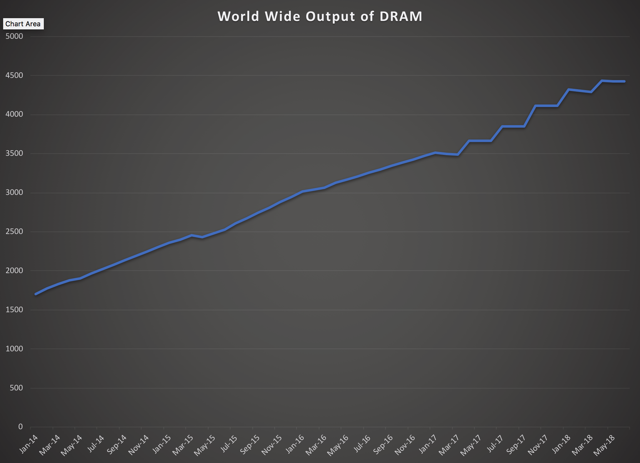

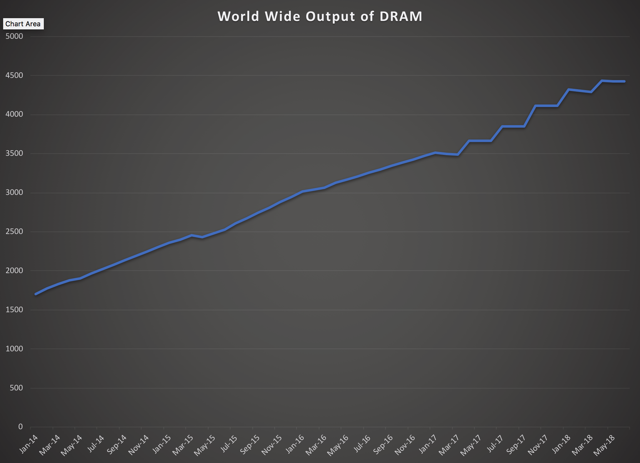

As a result of this fine-tuning process, rather than increasing capacity in one huge step, you are actually ramping it up slowly. The step-up model was common in the memory industry in the past, but with the increasing difficulty of manufacturing DRAM it has given way to the gradual ramp model.
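The difference between a step increase and a gradual ramp shows up in how much supply actually reaches the market in the first months. This Python sketch uses the daily output levels from the widget analogy (10, 30, 50, 60 and 100 per day); the week in which each level is reached is a hypothetical assumption, not a figure from any memory maker.

```python
# (start week, defect-free widgets per day); the levels come from the analogy,
# the start weeks are hypothetical.
RAMP_SCHEDULE = [(0, 10), (4, 30), (12, 50), (20, 60), (28, 100)]
MAX_CAPACITY = 100


def daily_output_ramp(week: int) -> int:
    """Defect-free widgets per day in a given week under the gradual ramp."""
    output = 0
    for start_week, per_day in RAMP_SCHEDULE:
        if week >= start_week:
            output = per_day
    return output


def cumulative_output(weeks: int, gradual: bool) -> int:
    """Total defect-free widgets over the first `weeks` weeks (7 days each)."""
    total = 0
    for week in range(weeks):
        per_day = daily_output_ramp(week) if gradual else MAX_CAPACITY
        total += 7 * per_day
    return total


for weeks in (4, 12, 26, 52):
    step = cumulative_output(weeks, gradual=False)
    ramp = cumulative_output(weeks, gradual=True)
    print(f"first {weeks:2d} weeks: step model {step:6d}, gradual ramp {ramp:6d} "
          f"({ramp / step:.0%} of step)")
```

Under the gradual ramp, new capacity trickles into the market over months instead of landing all at once, which gives the industry time to see demand before the full supply arrives.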

For the two reasons explained above, we believe the DRAM market specifically should maintain supply/demand equilibrium for the foreseeable future.

Contract Price vs. Spot Price

Spot prices are the prices smaller OEMs pay for memory on what is essentially an open market. By contrast, contract prices are prices that OEMs negotiate ahead of time with memory manufacturers. Think of it as buying lumber at Home Depot versus ordering a large delivery from a lumber yard.

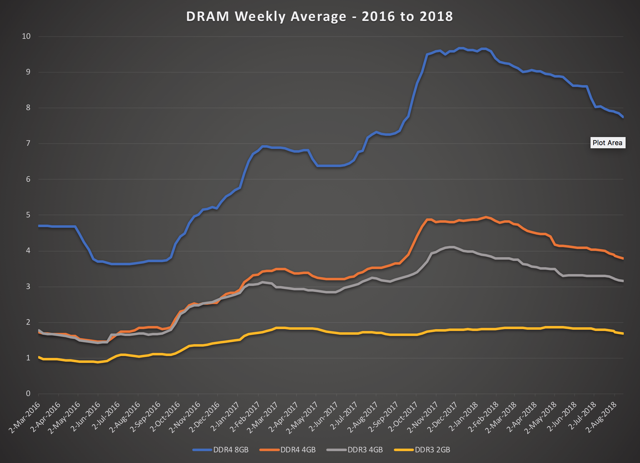

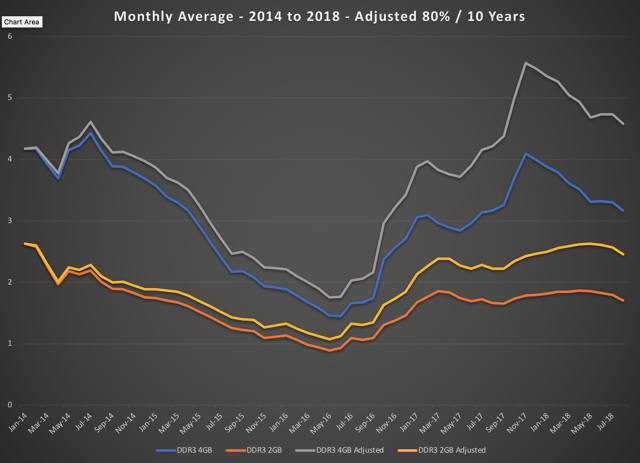

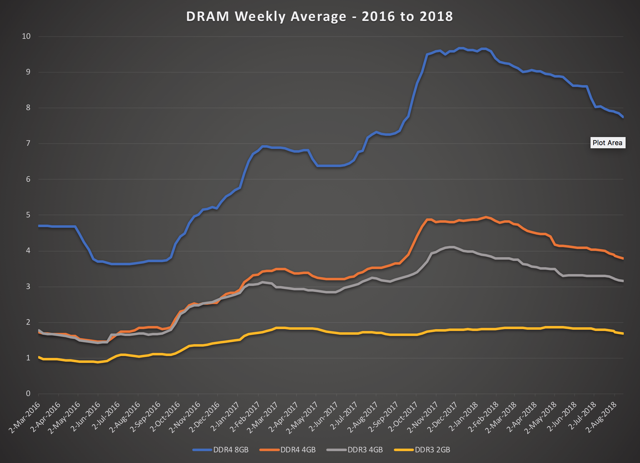

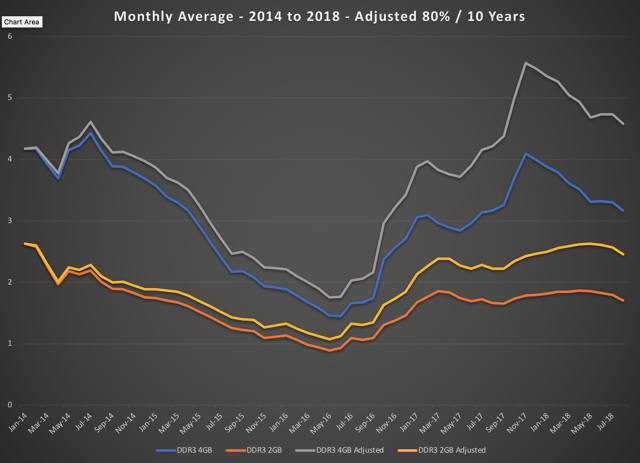

A recent cause for concern among memory market analysts has been the gradual decline in DRAM spot prices. In the chart below you can see that DRAM spot prices rose consistently until early 2018 but have been gradually declining for the last several months.

(Chart Courtesy of Zynath Group)

While this does suggest that we have probably reached the peak of the undersupply imbalance, it does not mean that the DRAM market is about to collapse. Spot prices are tracked for older, somewhat outdated memory types, whereas contract prices are usually negotiated for top-of-the-line memory chips. For instance, Micron was actually able to raise its negotiated contract prices during the same period in which spot prices were falling.

(From an extremely insightful article by William Tidwell on Seeking Alpha.)

Below you can see another chart, with spot prices adjusted for an obsolescence rate. Since memory is a high-tech commodity, it has a limited shelf life, so paying, say, $1/GB three years ago is not the same as paying $1/GB today: memory that was state of the art three years ago is somewhat outdated today. In the chart below we assumed that state-of-the-art memory loses 80% of its value over a period of 10 years, a pretty conservative but reasonable assumption. As you can see, when adjusted for obsolescence, current spot prices are still well above the previous peak in 2014.
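Here is a minimal Python sketch of such an adjustment, assuming the 80%-over-10-years value loss happens at a constant annual rate (about 14.9% per year) and that a past price is discounted by the value its memory has since lost. Both the decay shape and the function name are assumptions; the article does not say exactly how its chart was computed.

```python
# From the text: memory loses 80% of its value over 10 years.
# Spreading that loss at a constant annual rate is our own assumption.
VALUE_LEFT_AFTER_10_YEARS = 0.20
ANNUAL_RETENTION = VALUE_LEFT_AFTER_10_YEARS ** (1 / 10)  # about 0.851 per year


def obsolescence_adjusted(price_per_gb: float, years_ago: float) -> float:
    """Express a past price per GB in today's terms by discounting for obsolescence."""
    return price_per_gb * ANNUAL_RETENTION ** years_ago


print(f"value retained per year: {ANNUAL_RETENTION:.3f}")
# Hypothetical example: $1.00/GB paid four years ago, restated for obsolescence.
print(f"$1.00/GB four years ago is about ${obsolescence_adjusted(1.00, 4):.2f}/GB today")
```

Under this assumption, a $1.00/GB price paid four years ago counts as only about $0.53/GB in today's terms, which is why older price peaks shrink once obsolescence is taken into account.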

(Chart Courtesy of Zynath Group)

Another important thing to note is that memory prices are usually tracked in dollars per gigabyte, but companies like Micron or Samsung do not sell "gigabytes" of memory; they sell individual memory chips. Let's look at this dynamic in the next section.

Platters vs. Gigabytes

So what do companies like Micron and Samsung actually manufacture? Is it gigabytes of memory? The answer is yes and no. Yes, they manufacture microchips that can store gigabytes of data, but no, they don't manufacture memory by the gigabyte; they manufacture it by the microchip. Broadly speaking, if you don't account for R&D and capital equipment, making a 64GB die costs a company about as much as making an 8GB one, but the 64GB die can be sold for a lot more money. This dynamic is similar to that of legacy spinning hard drive manufacturers: it doesn't cost 10 times as much to make a 10TB drive as a 1TB drive.

Because of this pricing dynamic and ever-increasing single-die capacity, it doesn't make sense to track the long-term performance of memory companies on a price-per-GB basis. Price per wafer is a far more reasonable metric, though such data is not widely available.

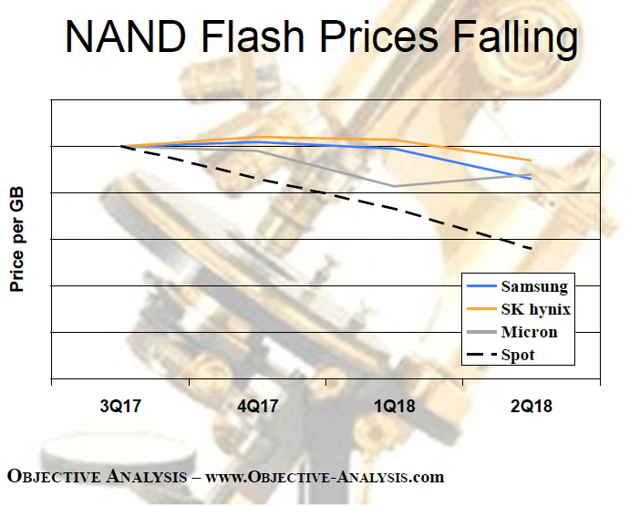

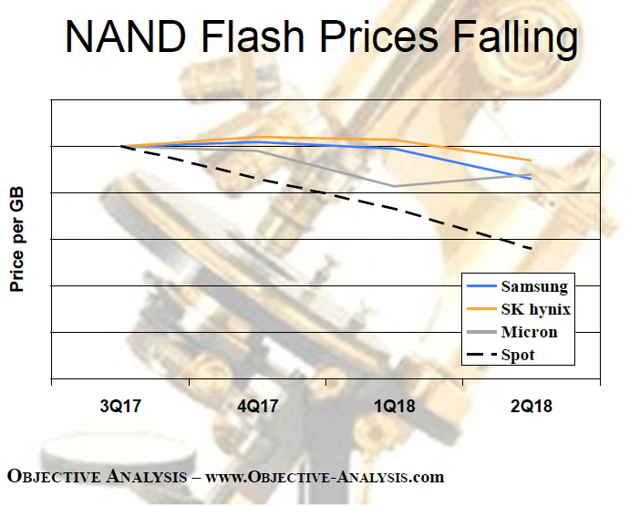

With NAND memory pricing we see a similar situation. NAND prices have been on a steady decline, and by now you should have some intuition for why. As gigabyte content per wafer grows far faster than price per manufactured wafer, price per gigabyte naturally falls. That's just progress and human ingenuity at work.
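The relationship is just a ratio: price per GB equals revenue per wafer divided by gigabytes per wafer. Every number in this Python sketch is hypothetical; it only shows that price per GB can fall steeply even while revenue per wafer rises, which is why a per-wafer metric is the more meaningful one.

```python
START_REVENUE_PER_WAFER = 5_000.0  # dollars per wafer (hypothetical)
START_GB_PER_WAFER = 2_000.0       # gigabytes per wafer (hypothetical)
WAFER_PRICE_GROWTH = 0.05          # revenue per wafer grows 5% a year (hypothetical)
BIT_GROWTH = 0.40                  # gigabytes per wafer grow 40% a year (hypothetical)


def wafer_revenue(year: int) -> float:
    """Revenue earned from one wafer in a given year."""
    return START_REVENUE_PER_WAFER * (1 + WAFER_PRICE_GROWTH) ** year


def gb_per_wafer(year: int) -> float:
    """Gigabytes of memory produced per wafer in a given year."""
    return START_GB_PER_WAFER * (1 + BIT_GROWTH) ** year


def price_per_gb(year: int) -> float:
    """Price per gigabyte: revenue per wafer divided by gigabytes per wafer."""
    return wafer_revenue(year) / gb_per_wafer(year)


for year in range(6):
    print(f"year {year}: ${wafer_revenue(year):,.0f} per wafer, "
          f"${price_per_gb(year):.2f} per GB")
```

In this made-up scenario, revenue per wafer grows by about 28% over five years while price per GB falls by roughly three quarters.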

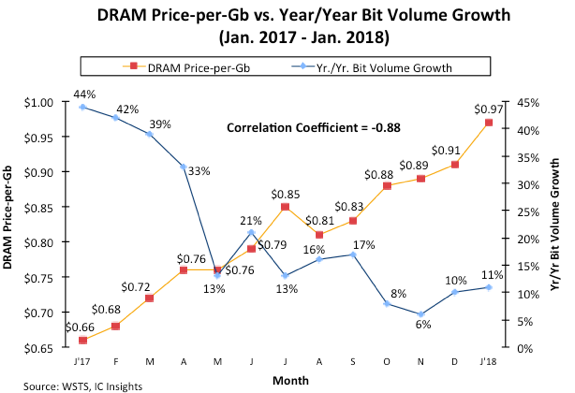

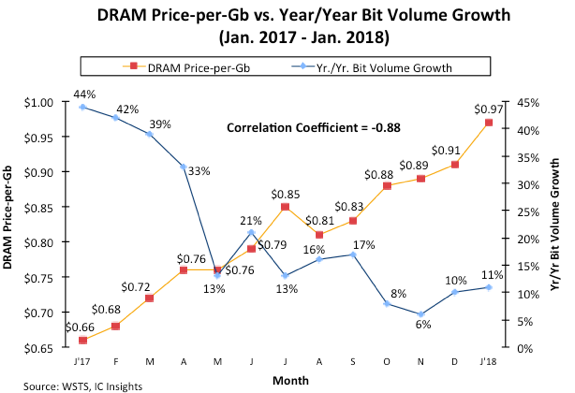

At first glance, you might think this surely means NAND manufacturers will soon innovate themselves straight out of business. After all, how much memory does one really need? Well, the answer is a lot, more than we can currently make. The chart below shows DRAM prices and DRAM volume growth, and you can clearly see how OEMs cut back on DRAM content as prices peaked. That's understandable, since they need to control their costs as well, but the reverse will be true as soon as prices start to come down a little.

(Chart Courtesy of ElectroIQ.com)

There are many different reasons why we are going to see an insatiable thirst for memory in the coming years. Let's look at some of these reasons in the next section.

Growth Drivers

eSports - eSports are the wave of the future. As traditional sports wane in popularity, eSports are on a meteoric rise, which is driving a significant uptick in desktop and gaming computer sales.

Self Driving Cars - It is widely estimated that a full self-driving car with level 5 autonomy will require at least 40 GB of DRAM and over a terabyte of NAND storage.

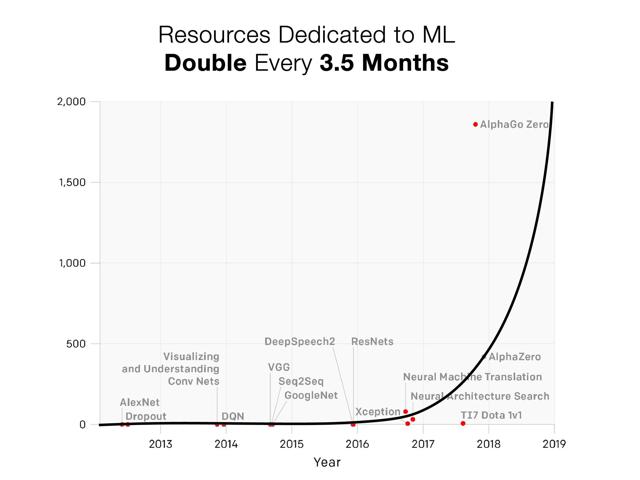

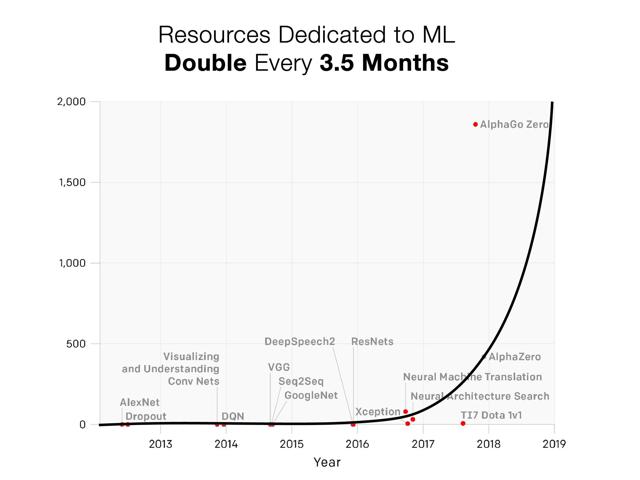

Artificial Intelligence - Machine learning, which enables artificial intelligence and will ultimately enable the self-driving cars described above, requires a tremendous amount of memory to store massive datasets and complex AI models. I see the current state of machine learning as comparable to internet technology circa 1998, and I strongly believe we are about to witness explosive growth in machine learning applications and technology. While Moore's Law predicts that computing power doubles roughly every 18 months, the computing resources dedicated to machine learning have been doubling, yes, doubling, every 3.5 months over the last several years.
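The gap between those two doubling periods compounds quickly. This short Python sketch compares them over an arbitrary six-year window, taking the 18-month and 3.5-month doubling times from the paragraph above; the window length is our own choice for illustration.

```python
def growth_factor(months: float, doubling_months: float) -> float:
    """How many times larger a quantity gets over `months` at a fixed doubling time."""
    return 2 ** (months / doubling_months)


SPAN_MONTHS = 72  # an arbitrary six-year window

moore = growth_factor(SPAN_MONTHS, 18)
machine_learning = growth_factor(SPAN_MONTHS, 3.5)

print(f"doubling every 18 months over 6 years:  {moore:,.0f}x")
print(f"doubling every 3.5 months over 6 years: {machine_learning:,.0f}x")
```

Over six years, an 18-month doubling time yields a 16x increase, while a 3.5-month doubling time yields roughly a 1.5-million-fold increase.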

(Chart Courtesy of Zynath Group)

5G Infrastructure Updates - As cell phone connections become more ubiquitous and faster than ever before, people will continue to upload and download videos at unprecedented rates. More data has been generated and stored since 2016 than in all of prior human history, and in the coming year we will double that again. With 5G ubiquitously available, this rate of data generation will only increase.

4K Content Proliferation - 4K video content requires roughly four times as much storage as regular HD content, and it is on the rise. As more and more households adopt 4K-capable television sets, the amount of storage required for 4K content will grow. Of course, most of this content won't be stored locally, but Netflix and Amazon servers use memory too.

Social Media Further Embracing Video - Instagram is a good canary in the coal mine for this one. More and more Instagram creators are switching away from photographs to video content, and that is just the beginning.

(Chart Courtesy of Zynath Group)

Conclusion

Hopefully by now you have a much better intuition about the memory industry and where it is heading in the next couple of years. Of course, this article is just a primer with no specific investment thesis, other than our strong belief that memory in general is a good bet for the foreseeable future. Please feel free to reach out with any additional questions or comments.

Disclosure: I am/we are long MU, INTC.

*******************************************************************************************************************************

UWG
