AMD Throws Down Gauntlet to Nvidia with Instinct MI250 Benchmarks

By Anton Shilov

Winning across the board.

(Image credit: AMD)

In an unexpected move, AMD this week published detailed performance numbers for its Instinct MI250X accelerator compared to Nvidia's A100 compute GPU. AMD's card predictably outperformed Nvidia's board in all cases, by 1.4 to roughly three times. But while it is not uncommon for hardware companies to demonstrate their advantages, detailed performance numbers versus the competition are rarely published on official websites. When a company does so, it usually means one thing: very high confidence in its products.

Up to Three Times More Performance

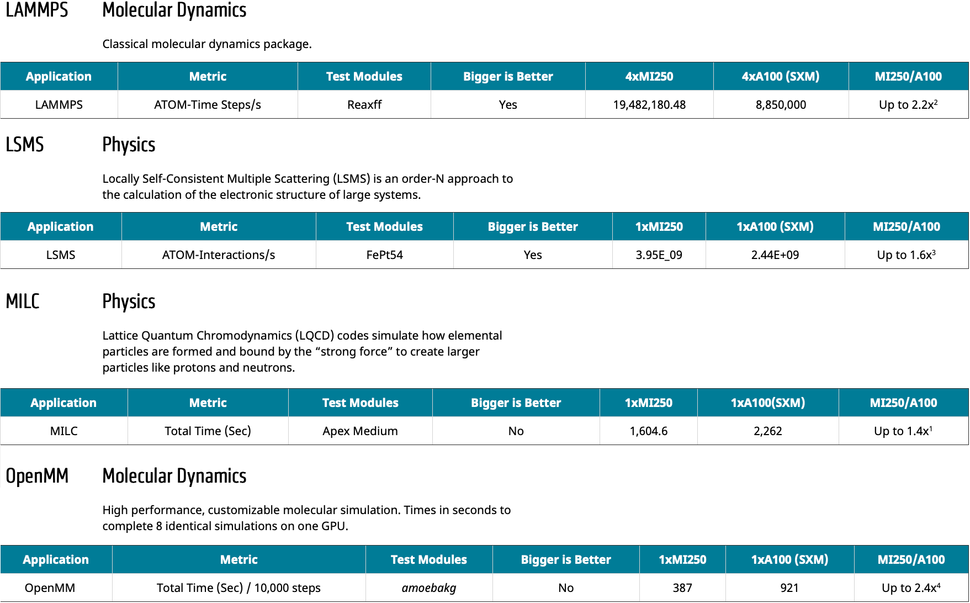

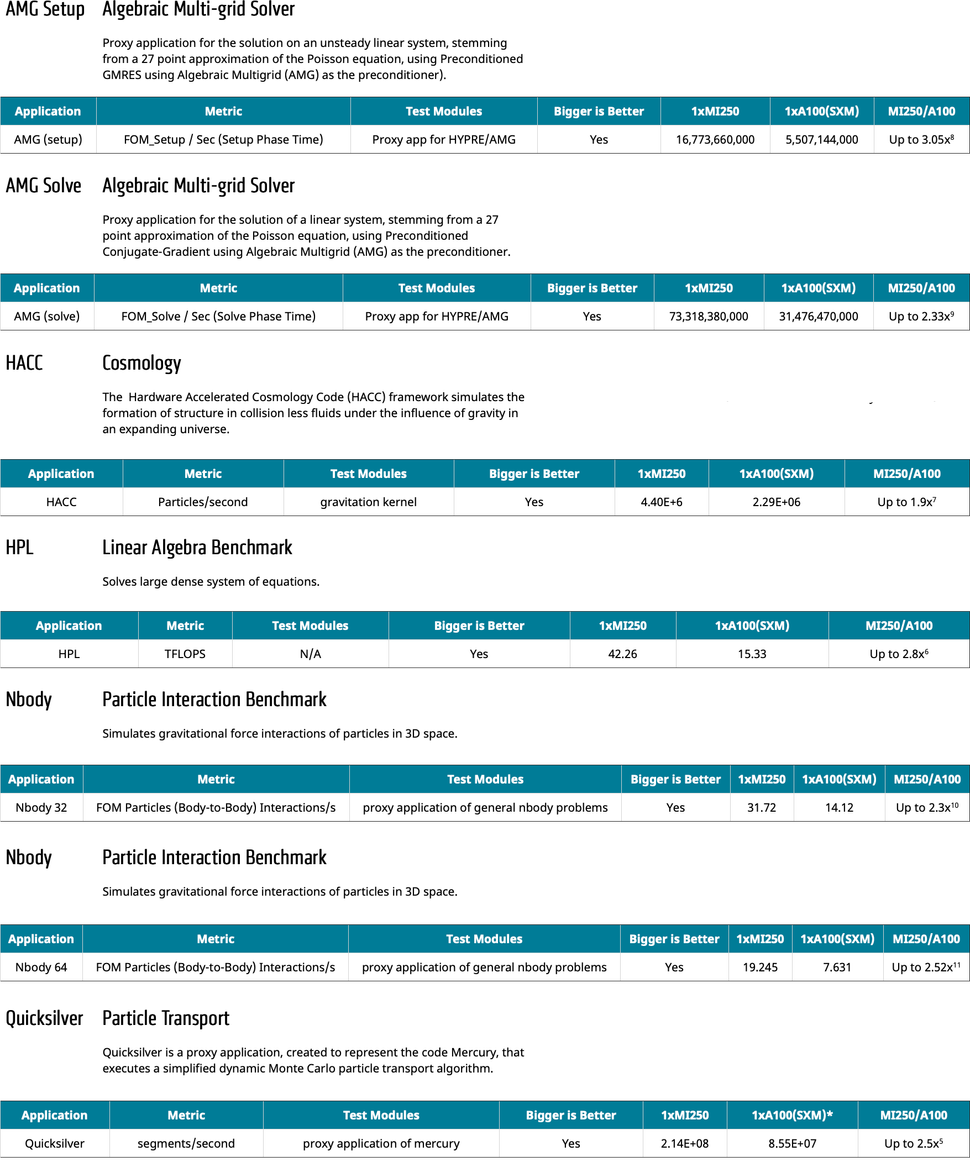

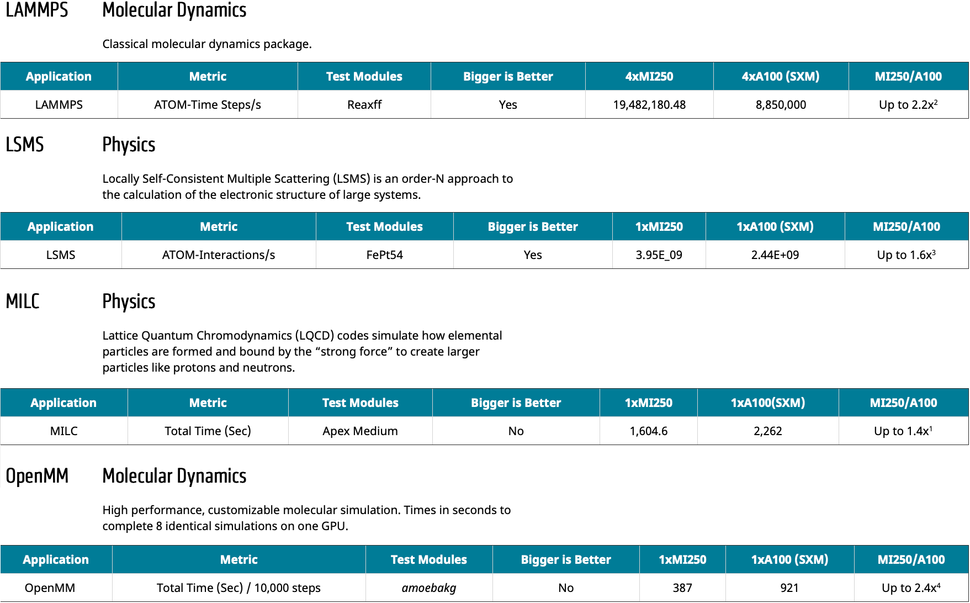

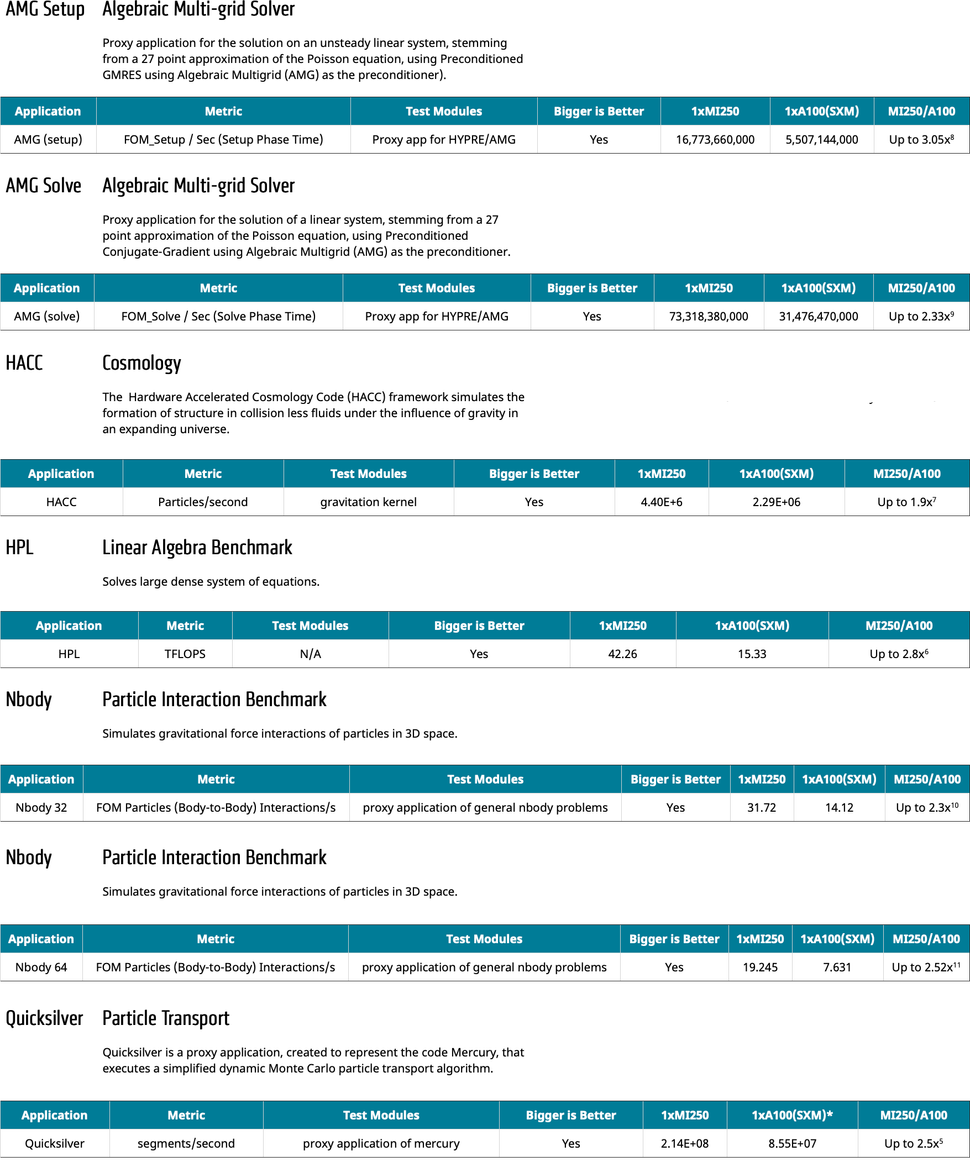

Since AMD's Instinct MI200 is aimed primarily at HPC and AI workloads (and obviously AMD tailored its CDNA 2 more for HPC and supercomputers rather than for AI), AMD tested the competing accelerators in various HPC applications and benchmarks dealing with algebra, physics, cosmology, molecular dynamics, and particle interaction.

There are a number of widely used physics and molecular dynamics HPC applications with industry-recognized tests, such as LAMMPS and OpenMM. These can be considered real-world workloads, and here AMD's MI250X outperforms Nvidia's A100 by 1.4 – 2.4 times.

There are also numerous HPC benchmarks that can mimic real-world algebraic, cosmology, and particle interaction workloads. In these cases, AMD's top-of-the-range compute accelerator is 1.9 – 3.05 times faster than Nvidia's flagship accelerator.

Keeping in mind that AMD's MI250X has considerably more ALUs running at high clocks than Nvidia's A100, it is not surprising that the new card dramatically outperforms its rival. Meanwhile, it is noteworthy that AMD did not run any AI benchmarks.

New Architecture, More ALUs

AMD's Instinct MI200 accelerators are powered by the company's latest CDNA 2 architecture, which is optimized for high-performance computing (HPC) and will power the upcoming Frontier supercomputer that promises to deliver about 1.5 FP64 ExaFLOPS of sustained performance. The MI200-series OAM boards use AMD's Aldebaran compute GPU, which consists of two graphics compute dies (GCDs) that each pack 29.1 billion transistors, slightly more than the 26.8 billion transistors inside the Navi 21. The GCDs are made using TSMC's N6 fabrication process, which enabled AMD to pack slightly more transistors and simplify the production process by using extreme ultraviolet lithography on more layers.

AMD's flagship Instinct MI250X accelerator features 14,080 stream processors (220 compute units) and is equipped with 128GB of HBM2E memory. The MI250X compute GPU is rated for 95.7 FP32/FP64 TFLOPS performance (same performance for matrix operations) as well as 383 BF16/INT8/INT4 TFLOPS/TOPS performance.

By contrast, Nvidia's A100 GPU consists of 54.2 billion transistors, has 6,912 active CUDA cores, and is paired with 80GB of HBM2E memory. Performance-wise, the accelerator offers 19.5 FP32 TFLOPS, 9.7 FP64 TFLOPS, 19.5 FP64 Tensor TFLOPS, 312 FP16/BF16 TFLOPS, and up to 624 INT8 TOPS (or 1,248 TOPS with sparsity).

Even on paper, AMD's Instinct MI200 series offers more performance in traditional HPC and matrix workloads, but Nvidia has an edge in AI cases. These peak performance numbers can be explained by the considerably higher ALU count of AMD's MI200 series.
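To make the on-paper comparison concrete, here is a minimal sketch that divides the peak figures quoted above for the MI250X by those quoted for the A100. It uses only numbers from this article; the dictionary keys and the `ratio` helper are illustrative names, and peak ratings say nothing about sustained performance in real applications.

```python
# Peak ratings exactly as quoted in this article (TFLOPS, or TOPS for INT8).
# Key names are illustrative labels, not vendor terminology.
mi250x = {"fp64": 95.7, "fp32": 95.7, "bf16": 383.0, "int8": 383.0, "alus": 14080}
a100 = {
    "fp64": 9.7,
    "fp64_tensor": 19.5,
    "fp32": 19.5,
    "bf16": 312.0,
    "int8": 624.0,  # dense figure; 1,248 TOPS is quoted with sparsity
    "alus": 6912,
}


def ratio(amd: float, nvidia: float) -> float:
    """Return how many times larger the AMD figure is, rounded to 2 places."""
    return round(amd / nvidia, 2)


paper_ratios = {
    "fp64 vs A100 FP64": ratio(mi250x["fp64"], a100["fp64"]),
    "fp64 vs A100 FP64 Tensor": ratio(mi250x["fp64"], a100["fp64_tensor"]),
    "fp32": ratio(mi250x["fp32"], a100["fp32"]),
    "bf16": ratio(mi250x["bf16"], a100["bf16"]),
    "int8 (dense)": ratio(mi250x["int8"], a100["int8"]),
    "ALU count": ratio(mi250x["alus"], a100["alus"]),
}

for name, value in paper_ratios.items():
    print(f"{name}: {value}x")
```

A value above 1 favors the MI250X and a value below 1 favors the A100. On these quoted figures, the INT8 ratio is the one that falls below 1.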


To demonstrate how good its flagship Instinct MI250X 128GB HBM2E compute accelerator is, AMD used 1P or 2P systems based on the 64-core AMD EPYC 7742, equipped with one or four AMD Instinct MI250X 128GB HBM2E compute GPUs or one or four Nvidia A100 80GB HBM2E GPUs. The company used AMD-optimized and CUDA-optimized software.

Summary

For now, AMD's Instinct MI250X is the world's highest-performing HPC accelerator, according to its own data. Considering the fact that Aldebaran has a whopping 14,080 ALUs and is rated for 95.7 FP32/FP64 TFLOPS performance, it is indeed the fastest compute GPU around.

Meanwhile, AMD launched its Instinct MI250X about 1.5 years after Nvidia's A100 and several months before Intel's Ponte Vecchio. It is natural for a 2021 compute accelerator to outperform a rival introduced more than a year earlier, but what we are curious about is how this GPU will stack up against Intel's supercomputer-bound Ponte Vecchio compute GPU.

tomshardware.com