End-to-End Recommender Systems with Merlin: Part 1

Aryan Gupta

A tour of implementing an end-to-end RecSys with Merlin on the Criteo dataset

NVIDIA Merlin™ is an open-source framework for building large-scale deep learning recommender systems. This series of articles dives into the Merlin SDKs and implements an end-to-end recommender system on the Criteo dataset in three parts: Part 1: ETL, Part 2: Training, Part 3: Inference.

RECOMMENDER SYSTEMS AT A GLANCE

Figure 1: NVIDIA Merlin

Recommender systems (RecSys) have become an important component of the modern world. They are vital to many industries that provide e-services, ranging from news services and social media to online video streaming platforms. The data generated by these industries is often described as more valuable than gold. A scalable end-to-end RecSys pipeline captures user behaviour analytics and provides personalized recommendations to the user.

Figure 2: RecSys at a Glance

The goal of a recommender system is to find the most clickable (likeable) items for a given user. It does so by efficiently analyzing data gathered on user interactions, such as likes, clicks, watch times, purchases, and session-based product impressions. It reduces information overload by recommending personalized content and compatible products from a global product space, then ranks the candidates and displays the top-N items to the user. The ranking is obtained by estimating the likelihood of a particular item being clicked by a specific user. This process is referred to as click-through rate (CTR) estimation.
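The ranking step described above can be sketched in a few lines. This is a minimal illustration, assuming a trained model has already produced a predicted click probability per item; the item ids, the scores, and the helper name `top_n_items` are hypothetical.

```python
import heapq


def top_n_items(predicted_ctr, n):
    """Return the n item ids with the highest predicted click probability."""
    # Iterating a dict yields its keys; each key is ranked by its score.
    return heapq.nlargest(n, predicted_ctr, key=predicted_ctr.get)


# Hypothetical CTR estimates for one user (a real pipeline would get
# these from the model trained later in this series).
scores = {"item_a": 0.12, "item_b": 0.47, "item_c": 0.05, "item_d": 0.31}

print(top_n_items(scores, 2))  # ['item_b', 'item_d']
```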

In this article, we develop an end-to-end RecSys pipeline using the Merlin architecture on the Criteo Display Advertising dataset.

DATASET

The dataset plays a crucial role in efficient CTR estimation. The estimation relies on a rich dataset of historical user-item interactions, with features such as age, type, price, gender, and a click label (0 or 1). Several ETL (Extract-Transform-Load) operations are applied to it, and state-of-the-art models are then trained on the transformed data for CTR estimation.

Figure 3: Criteo AI Labs

A range of datasets is commonly used in the domain of recommender systems. One of them is the Criteo Display Advertising dataset, which contains data on millions of display ads and their click feedback. It is designed for benchmarking click-through rate (CTR) prediction.

DATA DESCRIPTION

The dataset consists of a portion of the company’s online traffic over a period of 24 days. The first column indicates whether a display ad has been clicked.

There are 13 features taking integer values and 26 categorical features. The integer features are referred to as dense values, while the categorical features, once embedded as vectors, are referred to as sparse values. The columns are separated by tabs and follow the schema below:

<label> <integer feature 1> … <integer feature 13> <categorical feature 1> … <categorical feature 26>
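To make the schema concrete, here is a minimal sketch of how one raw line could be split into its label, dense, and sparse parts. It assumes tab-separated fields and that a missing value shows up as an empty field; the function name `parse_criteo_line` and the sample values are hypothetical.

```python
NUM_INT = 13  # integer (dense) features
NUM_CAT = 26  # categorical (sparse) features


def parse_criteo_line(line):
    """Split one tab-separated record into (label, dense, sparse)."""
    fields = line.rstrip("\n").split("\t")
    expected = 1 + NUM_INT + NUM_CAT
    if len(fields) != expected:
        raise ValueError(f"expected {expected} fields, got {len(fields)}")
    label = int(fields[0])
    # Assumption: an empty field means the value is missing.
    dense = [int(v) if v else None for v in fields[1:1 + NUM_INT]]
    sparse = [v if v else None for v in fields[1 + NUM_INT:]]
    return label, dense, sparse


# Synthetic record: label 1, integers 0..12, categorical tokens c0..c25.
sample = "\t".join(["1"] + [str(i) for i in range(13)] + [f"c{i}" for i in range(26)])
label, dense, sparse = parse_criteo_line(sample)
print(label, len(dense), len(sparse))  # 1 13 26
```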

The dataset can be downloaded from the link below:

azuremlsampleexperiments.blob.core.windows.net, where XX goes from 0 to 23

A sample script that downloads the data for all the days is shown below:

The file named ‘filename.txt’ contains the download links for all 24 days. Each file is approximately 15 GB as a compressed .gz archive and up to 50 GB uncompressed. The total size of the dataset is approximately 1.3 TB.

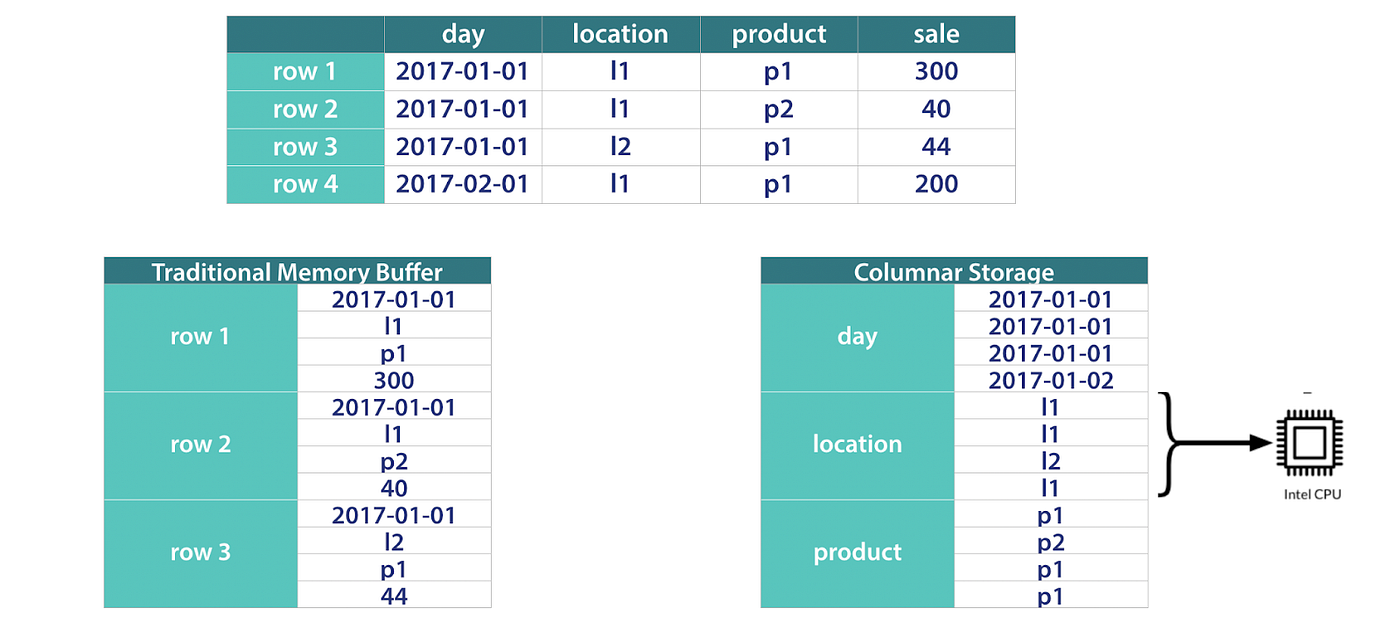
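For readers who cannot view the image, a download loop along these lines could look as follows. This is a sketch rather than the original script: it assumes ‘filename.txt’ holds one URL per line and that the last path segment of each URL is a usable file name; `read_links` and `download_all` are hypothetical helper names.

```python
import os
import urllib.request


def read_links(list_path):
    """Read download URLs from a text file, skipping blank lines."""
    with open(list_path) as f:
        return [line.strip() for line in f if line.strip()]


def download_all(list_path, out_dir):
    """Download every listed file into out_dir and return the local paths."""
    os.makedirs(out_dir, exist_ok=True)
    saved = []
    for url in read_links(list_path):
        # Assumption: the last path segment of the URL is the file name.
        target = os.path.join(out_dir, os.path.basename(url))
        if not os.path.exists(target):  # skip files already downloaded
            urllib.request.urlretrieve(url, target)
        saved.append(target)
    return saved


# Example call (commented out because it starts a very large download):
# download_all("filename.txt", "criteo_raw")
```

Skipping files that already exist makes the loop safe to restart after an interrupted run, which matters when each file is tens of gigabytes.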

Parquet Conversion

Once the dataset has been downloaded, the next step is to convert it into a format that supports faster processing of large datasets. One such format is Apache Parquet, which stores data by column and compresses it using several encoding methods. The columnar layout is illustrated in the figure below.

Figure 4: Comparison of Traditional vs Columnar Data Storage

The figure shows the difference between a traditional row-oriented memory buffer and Parquet-based columnar data storage. Because a query can read only the columns it needs, Parquet is better optimized for performance than row-oriented CSV files: data lookup and query execution are faster.

Considering these advantages, the Criteo dataset is converted to a Parquet-based columnar schema.
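The difference between the two layouts can be illustrated with plain Python containers. This is only a conceptual sketch, not how Parquet is implemented; the three records and their values are made up.

```python
# Three hypothetical records using a few Criteo-style column names.
rows = [
    {"label": 1, "I1": 5, "C1": "68fd1e64"},
    {"label": 0, "I1": 2, "C1": "05db9164"},
    {"label": 1, "I1": 9, "C1": "68fd1e64"},
]

# Row-oriented layout (CSV-like): computing the click rate walks every
# full record, even though only one field per record is needed.
row_click_rate = sum(r["label"] for r in rows) / len(rows)

# Column-oriented layout (Parquet-like): each column is stored together,
# so a query on "label" reads that one list and nothing else.
columns = {name: [r[name] for r in rows] for name in rows[0]}
col_click_rate = sum(columns["label"]) / len(columns["label"])

print(columns["label"])  # [1, 0, 1]
```

Storing similar values next to each other is also what makes per-column encoding and compression effective.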

The APIs in Merlin’s NVTabular SDK used for this step are:

- nvtabular.Dataset: loads the dataset in the NVTabular format.

- to_parquet: writes the dataset out in Parquet format.

- For a speed boost, the entire task is scaled over a multi-GPU cluster using Dask and LocalCUDACluster.

The entire conversion took 5 min 59 sec on a dual Quadro RTX 8000 GPU setup.
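Put together, the conversion could look roughly like the sketch below. It is not the exact script used for the timing above. It assumes the raw files are already uncompressed, leaves column types to inference, and uses `label`, `I1`..`I13`, and `C1`..`C26` as illustrative column names; the helper names and the `part_mem_fraction` value are also assumptions. The GPU libraries are imported inside the function so the column helper can be used on a machine without them.

```python
import glob


def criteo_columns():
    """Column names matching the schema: label, 13 integers, 26 categoricals."""
    ints = [f"I{i}" for i in range(1, 14)]
    cats = [f"C{i}" for i in range(1, 27)]
    return ["label"] + ints + cats


def convert_to_parquet(input_glob, output_dir):
    """Convert tab-separated Criteo files to Parquet on a local GPU cluster."""
    # Lazy imports: these packages require a CUDA-capable environment.
    from dask.distributed import Client
    from dask_cuda import LocalCUDACluster
    import nvtabular as nvt

    cluster = LocalCUDACluster()  # one Dask worker per visible GPU
    client = Client(cluster)      # becomes Dask's default scheduler
    try:
        dataset = nvt.Dataset(
            sorted(glob.glob(input_glob)),
            engine="csv",
            sep="\t",
            names=criteo_columns(),
            part_mem_fraction=0.10,  # assumed partition size per GPU
        )
        # preserve_files=True keeps one output file per input file.
        dataset.to_parquet(output_dir, preserve_files=True)
    finally:
        client.close()
        cluster.close()


# Example call (paths are placeholders):
# convert_to_parquet("criteo_raw/day_*", "criteo_parquet")
```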

The remainder of this article is available at:

medium.com@aryan.gupta18/end-to-end-recommender-systems-with-merlin-part-1-89fabe2fa05b