Vera Rubin: a bit intense, but a drop-in Blackwell replacement.

Rolling MoAPS made easy.

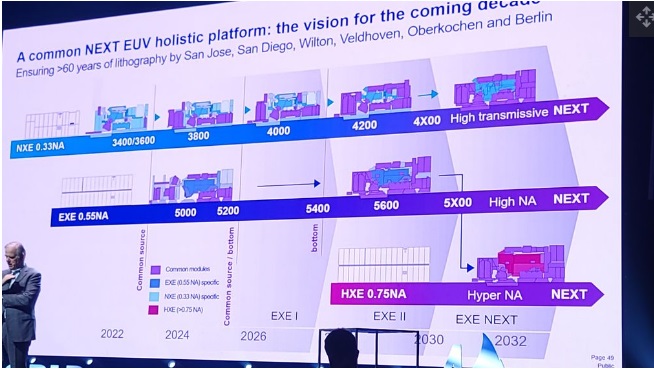

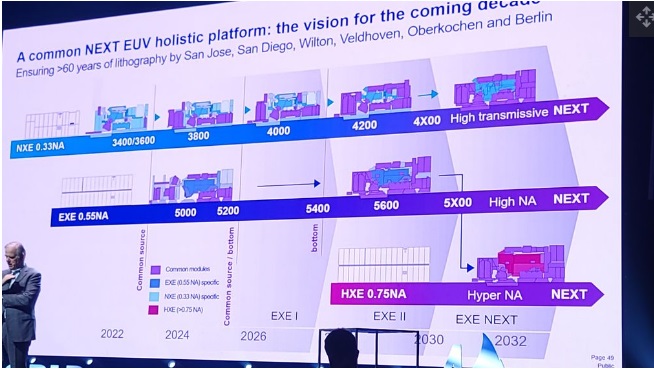

ASML clear '32 roadmap**

Could Vera Rubin spark another AI wave? Many AI experts agree that the industry’s next major leap won't come from smarter algorithms, but from faster, denser infrastructure, and Rubin is right in that lucrative wheelhouse.

Analysts say Rubin's new rack-scale design and economics could unlock the next wave of AI adoption across hyperscalers and enterprises.

Here’s why Vera Rubin matters

- Bigger context windows: With a whopping 100 TB of rack memory and 1.7 PB/s bandwidth, Rubin is able to handle colossal datasets and prompts on-rack, cutting latency and chatter.

- Better ROI math: Nvidia claims customers can monetize nearly $5 billion in token revenue per $100 million invested in Rubin infrastructure. If that claim is even remotely accurate, it would substantially cut inference costs and make always-on copilots commercially viable.

- Predictable rollout: Nvidia’s steady cadence de-risks hyperscaler planning: buyers can deploy Blackwell now and slot in Rubin in 2026 without retooling their stacks*.

- Higher pricing power: With HBM4, NVLink 144, Spectrum-X networking, and full rack-scale integration, Vera Rubin is likely to carry significantly higher ASPs than Blackwell, expanding the company's gross margins in the process.
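The ROI claim in the list above is simple arithmetic, so it is easy to sanity-check. Here is a minimal sketch in Python, assuming the quoted figures ("nearly $5 billion" per "$100 million") are taken at face value. The haircut fractions are hypothetical, chosen only to show how the multiple shrinks if the claim is only partly accurate.

```python
# Figures as quoted above (Nvidia's claim, not independently verified).
CLAIMED_TOKEN_REVENUE_USD = 5e9   # "nearly $5 billion" in token revenue
CAPEX_USD = 100e6                 # "$100 million invested"


def revenue_multiple(token_revenue_usd: float, capex_usd: float) -> float:
    """Return token revenue per dollar of infrastructure spend."""
    if capex_usd <= 0:
        raise ValueError("capex_usd must be positive")
    return token_revenue_usd / capex_usd


# Hypothetical haircuts: the fraction of the claimed revenue actually realized.
HAIRCUTS = (1.0, 0.5, 0.1)

for realized in HAIRCUTS:
    multiple = revenue_multiple(CLAIMED_TOKEN_REVENUE_USD * realized, CAPEX_USD)
    print(f"{realized:.0%} of claim -> {multiple:.0f}x revenue per dollar invested")
```

Taken at face value, the claim works out to roughly 50x revenue per dollar invested. Even if only a tenth of it were realized, the multiple would still be about 5x.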

Why Nvidia’s Vera Rubin may unleash another AI wave

*Copilot:

Yes, that statement suggests Rubin GPUs are designed to be drop-in compatible with Blackwell systems, minimizing the need for major infrastructure changes.

Here’s what that means in practical terms for data centers:

Architectural Continuity Between Blackwell and Rubin

- Shared packaging and interconnects: Both Blackwell and Rubin GPUs use CoWoS-L packaging and NVLink 5.0 or higher, meaning the physical and electrical interfaces are consistent.

- Rack-level compatibility: Rubin GPUs (like the VR200 series) are expected to fit into existing Blackwell-based racks such as NVL72 or NVL576, especially those using liquid cooling and high-density layouts.

- Power and thermal scaling: While Rubin GPUs have higher TDPs (up to 1800W vs. Blackwell’s 1400W), NVIDIA’s roadmap anticipates this and aligns cooling and power delivery standards accordingly.
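To put the TDP gap above in rack terms, here is a rough back-of-envelope sketch in Python. It uses only the two per-GPU figures quoted above. The 72-GPU rack count is an assumption based on the NVL72 name, and the estimate covers GPUs only, ignoring CPUs, networking, and cooling overhead.

```python
BLACKWELL_TDP_W = 1400  # per-GPU figure quoted above
RUBIN_TDP_W = 1800      # per-GPU "up to" figure quoted above
GPUS_PER_RACK = 72      # assumption: 72 GPUs per rack, as the NVL72 name suggests


def added_gpu_power_kw(old_tdp_w: float, new_tdp_w: float, gpus: int) -> float:
    """Extra GPU power per rack, in kW, when every GPU is swapped."""
    return (new_tdp_w - old_tdp_w) * gpus / 1000


def relative_increase(old_tdp_w: float, new_tdp_w: float) -> float:
    """Fractional per-GPU TDP increase (0.25 means +25%)."""
    return (new_tdp_w - old_tdp_w) / old_tdp_w


extra_kw = added_gpu_power_kw(BLACKWELL_TDP_W, RUBIN_TDP_W, GPUS_PER_RACK)
pct = relative_increase(BLACKWELL_TDP_W, RUBIN_TDP_W)
print(f"Extra GPU power per rack: {extra_kw:.1f} kW")
print(f"Per-GPU TDP increase: {pct:.1%}")
```

With these inputs the sketch gives about 28.8 kW of extra GPU power per rack, or roughly a 29% per-GPU increase. That is the margin a "Blackwell-ready" rack's cooling and power delivery would need to absorb.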

Software and AI Stack Alignment

- CUDA and Transformer Engine continuity: Rubin builds on Blackwell’s Transformer Engine and mixed-precision compute logic, so AI frameworks optimized for Blackwell (e.g., PyTorch, TensorFlow) will run efficiently on Rubin with minimal tuning.

- Tensor Core evolution: Rubin doubles down on attention-layer acceleration and supports newer FP4/FP6 formats, but these are additive—existing FP8/BF16 workloads remain compatible.

Operational Implications

- No need to retool server chassis or networking fabric if the data center is already Blackwell-ready.

- Procurement and deployment cycles can be staggered: deploy Blackwell now, then upgrade to Rubin in 2026 without forklift upgrades or downtime.

In short, Rubin is architected as a forward-compatible successor to Blackwell, allowing data centers to scale performance without re-engineering their stacks. If you're planning deployments now, Blackwell gives you a smooth on-ramp to Rubin’s capabilities later.

Want to dig into how Rubin’s chiplet layout or memory bandwidth might affect inference latency or training throughput?

**

PS

How soon NXT:2200i?

e6P?

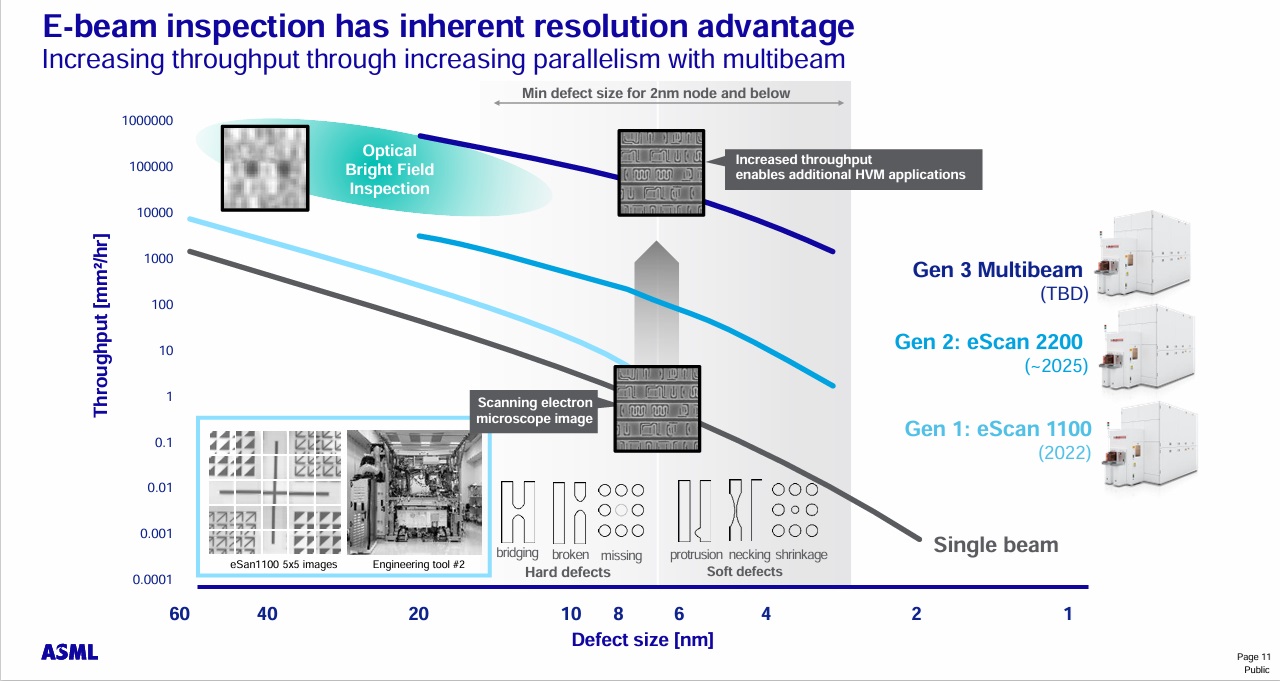

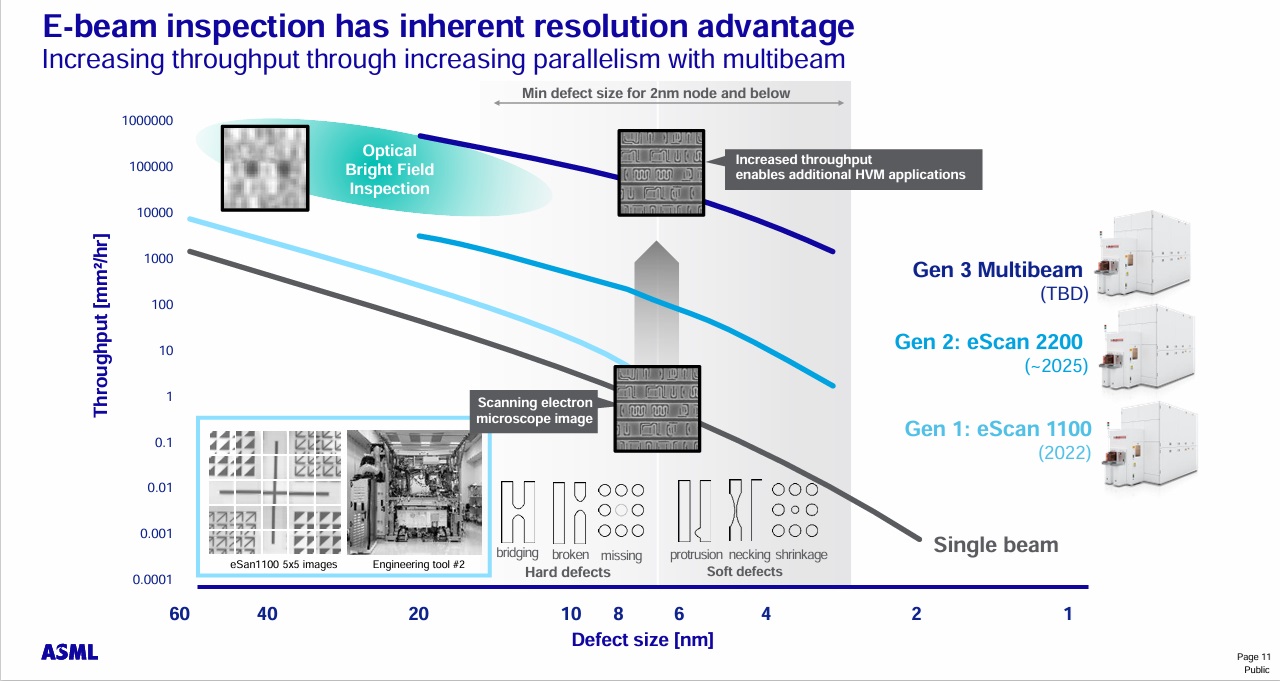

eScan 2200?

With Village all in?