Really interesting article on the future of memory:

full article at x.com

The Future of the Memory Business in the AI Era

Introduction: A 70-Year Transformation

The memory semiconductor industry is changing its identity. Since Intel commercialized DRAM in the 1970s, memory has been a commodity for over 50 years. Faster, bigger, cheaper. Manufacturers evolved their fabrication processes following Moore's Law, and the market cycled through growth and decline with PC, smartphone, and data center booms.

But starting in 2024, the rules changed.

Micron sees the HBM TAM (Total Addressable Market) growing from $35 billion in 2025 to $100 billion in 2028, two years ahead of original forecasts. The current market expects HBM revenue to nearly double to $34 billion in 2025 and account for half of the DRAM market by 2030.

This isn't just another memory cycle boom. It marks a structural transformation where memory evolves from commodity to strategic asset, and ultimately to platform.

People who view memory purely through market logic see "another boom-bust cycle" in this upturn. But those who also understand the technology see "the reinvention of computing." And history has proven the latter correct.

Part 1: The Memory Wall Becomes Real

1.1 Exploding Demand

AI accelerator performance is advancing far faster than memory bandwidth. HBM capacity has gone from the H100's 80GB to the GB200's 192GB, with Rubin Ultra slated for 1TB. HBM consumption per GPU has increased 3.6x.

The problem is this isn't the end. Every time HBM capacity and bandwidth increase generation over generation, designers immediately fill that space by increasing parameter counts, context lengths, and KVCache. Memory is always the next bottleneck.

It's a kind of Jevons Paradox. GPU performance doubles annually but memory bandwidth only grows 20-30% per year. This gap has become the memory wall, the core industry issue of 2025.
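To see how quickly that gap compounds, here is a minimal sketch. It assumes the growth rates quoted above hold steady every year (2x for compute, 20-30% for bandwidth), which is a simplification; the function name is just for illustration.

```python
def compute_to_bandwidth_gap(years: int,
                             compute_growth: float = 2.0,
                             bandwidth_growth: float = 1.25) -> float:
    """Ratio of cumulative compute growth to cumulative bandwidth growth."""
    # Both quantities compound annually, so the gap compounds as well.
    return (compute_growth / bandwidth_growth) ** years


for years in (1, 3, 5):
    low = compute_to_bandwidth_gap(years, bandwidth_growth=1.30)
    high = compute_to_bandwidth_gap(years, bandwidth_growth=1.20)
    print(f"after {years} years: compute outpaces bandwidth by {low:.1f}x to {high:.1f}x")
```

Under these assumed rates, five years of compounding leaves compute roughly 8.6x to 12.9x ahead of bandwidth.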

1.2 Triple Bottleneck

HBM shortage isn't simply a DRAM production problem. Three bottlenecks operate simultaneously.

Wafer reallocation. HBM requires additional processes like TSV, thinning, and stacking. The industry estimates roughly 3x more wafers are needed to produce the same capacity. When SK Hynix allocates wafers to HBM, DDR5 production decreases.
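A rough sketch of the trade-off, assuming the 3x wafer penalty above applies uniformly. The 30% HBM wafer share is a hypothetical input, not a reported figure.

```python
def relative_bit_output(hbm_wafer_share: float, wafer_penalty: float = 3.0) -> float:
    """Total bit output relative to a fab that runs only conventional DRAM.

    hbm_wafer_share: fraction of wafer starts moved to HBM (0.0 to 1.0).
    wafer_penalty:   wafers HBM needs per unit of capacity vs. conventional DRAM.
    """
    if not 0.0 <= hbm_wafer_share <= 1.0:
        raise ValueError("hbm_wafer_share must be between 0 and 1")
    conventional_bits = 1.0 - hbm_wafer_share
    hbm_bits = hbm_wafer_share / wafer_penalty
    return conventional_bits + hbm_bits


share = 0.30  # hypothetical share of wafers reallocated to HBM
print(f"total bit output: {relative_bit_output(share):.0%} of the all-DDR5 baseline")
print(f"conventional DRAM output: {1.0 - share:.0%} of the baseline")
```

With these inputs, conventional DRAM output falls to 70% of the baseline and total bits shipped fall to 80%, which is why HBM ramps tighten DDR5 supply.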

Advanced packaging shortage. TSMC is aggressively expanding CoWoS but demand is too strong. CoWoS-L surged year over year in 2025, and while TSMC expanded to 50,000 wafers per month, it's still insufficient.

Strategic preemption. In July 2025, OpenAI and Microsoft reportedly secured 900,000 DRAM wafers monthly from Samsung and SK Hynix for Project Stargate. That's 35-40% of global capacity. NVIDIA has locked up most of SK Hynix's HBM through 2026.
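As a quick sanity check, the two numbers above imply a global capacity figure. This sketch only inverts the reported percentage; it is not an independent estimate.

```python
def implied_global_capacity(secured_wafers: float, share_of_global: float) -> float:
    """Global monthly wafer capacity implied by a secured volume and its share."""
    return secured_wafers / share_of_global


secured = 900_000  # wafers per month, as reported above
for share in (0.35, 0.40):
    total = implied_global_capacity(secured, share)
    print(f"at {share:.0%} of global capacity: about {total:,.0f} wafers per month")
```

The reported share implies global DRAM capacity of roughly 2.25 to 2.57 million wafers per month.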

1.3 The Reality in Numbers

Supply: HBM is sold out through 2026 for SK Hynix, Micron, and Samsung. Major hyperscalers and GPU manufacturers have locked in over 90% of 2026 production capacity.

Pricing: According to TrendForce, HBM3E in Q2 2025 cost 4-5x more than server DDR5. This gap is expected to narrow to 1-2x by late 2026. Ironically, not because HBM got cheaper, but because DDR5 went up.
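To get a feel for what that narrowing implies, here is a small sketch. It assumes the HBM price stays exactly flat, which is a simplification of "HBM didn't get cheaper."

```python
def required_ddr5_increase(premium_before: float, premium_after: float) -> float:
    """Factor by which DDR5 prices must rise to shrink the HBM premium,
    assuming the HBM price itself does not move."""
    return premium_before / premium_after


# Midpoints of the ranges quoted above: 4-5x now, 1-2x by late 2026.
print(f"midpoint case: DDR5 up {required_ddr5_increase(4.5, 1.5):.1f}x")
# Extremes of the two ranges.
print(f"smallest move: DDR5 up {required_ddr5_increase(4.0, 2.0):.1f}x")
print(f"largest move:  DDR5 up {required_ddr5_increase(5.0, 1.0):.1f}x")
```

Under that assumption, server DDR5 would have to rise somewhere between 2x and 5x, with 3x at the midpoints.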

Inventory: Inventory is rapidly depleting, strengthening suppliers' pricing power.

After reporting fiscal Q4 2025 revenue of $11.3 billion, Micron wound down some Crucial consumer product lines. They're strengthening resource allocation focused on strategic accounts. Abandoning parts of the consumer market to concentrate on hyperscalers. Extreme but logical. Higher margins, stable relationships, strong lock-in.

Part 2: The Death of Commodity

2.1 The Emergence of Custom HBM

Until 2024, memory followed JEDEC standards as generic products. But starting with HBM4, the rules completely changed.

Custom HBM optimizes data processing for customer requirements by moving specific system semiconductor functions into the HBM base die. SK Hynix unveiled cHBM at CES 2026, demonstrating a design that integrates some computation and control functions previously handled by GPUs or ASICs into HBM.

Marvell's custom HBM showed 25% compute area increase, 33% memory capacity increase, and 70% interface power reduction.

Samsung Electronics expects custom HBM market share to exceed 50% in the near future. Some manufacturers forecast 30-40% by 2030.

Related post by Damnang2 (@damnang2), Jan 23: What is Custom HBM? Why the Rules of the HBM Market Are Changing

What's Happening in the HBM Market in 2026: As of January 2026, Samsung, SK hynix, and Micron have all delivered paid HBM4 samples to NVIDIA. This signals a move beyond free prototypes into the...

2.2 Defining the Platform

In the custom HBM era, memory companies evolve from component suppliers to platform providers.

Technical elements: custom base die design (3nm to 5nm logic), RAS feature integration, power optimization, thermal management, advanced packaging integration (TSV, HCB, CoWoS).

Business elements: multi-year co-development (2-3 year roadmap alignment), long-term price and volume contracts, shared validation systems, software stack optimization cooperation.

This is the mechanism that creates ecosystem lock-in.

SK Hynix is developing next-generation HBM base dies in collaboration with NVIDIA and TSMC. NVIDIA urging SK Hynix to accelerate HBM4 by 6 months wasn't just a supply contract. It's roadmap alignment, early access, joint optimization.

Memory is no longer "dumb storage" but "intelligent processing." Pricing power recovers, and multi-year customer relationships exponentially increase switching costs.

Part 3: Threat Assessment

3.1 Intel's ZAM

Intel has returned to the memory market. Intel and SoftBank subsidiary Saimemory are co-developing Z-Angle Memory (ZAM), which is designed to compete with HBM and claims higher capacity, greater bandwidth, and half the power consumption.

It's symbolic as Intel's first return to DRAM-class memory since it exited the DRAM business in the 1980s.

But the threat is necessarily limited. Saimemory plans first prototype in 2027, mass production in 2029. 2029 is too late. By then HBM4/HBM4E will be mature, the custom HBM ecosystem will be entrenched, and multi-year contracts with NVIDIA, Google, and Amazon will be locked in.

More importantly, Intel's foundry business itself is uncertain.

3.2 China's Pursuit

China has made HBM a strategic priority. They've planned $200 billion in subsidies for domestic semiconductors over the next five years, with significant portions going to HBM.

CXMT is aggressively expanding HBM capacity. They've stockpiled large quantities of equipment in preparation for export controls. HBM2 8-high entered mass production in H1 2025, with TSV capacity expected to match Micron's by year-end.

Huawei affiliates are also moving. XMC produces HBM wafers while SJSemi handles packaging.

But their real challenge is packaging. The biggest hurdle in HBM ramping is the combination of DRAM die production, stacking, and interconnection. One misstep ruins the entire package.

In this respect, Chinese memory companies face structural disadvantages. Taiwan accounts for over 70% of global logic advanced packaging, and Korea holds 85% of HBM advanced packaging.

China will certainly mass-produce HBM3 in 2026-2027. But back-end throughput and yield will constrain supply. And by then, leading companies will have already fully transitioned to HBM4.

3.3 Hyperscaler Counterattack

The most unexpected threats always come from customers.

In 2025-2026, Google, Microsoft, Meta, and Amazon are moving custom AI silicon out of the "experimental" phase and deploying it aggressively. It's an attempt to break free from dependence on third-party hardware providers.

Major lineup:

Google: TPU v7 (Ironwood), 4.6 PFLOPS FP8, co-developed with Broadcom

Microsoft: Maia 100 (handling Azure OpenAI inference), Maia 200 (2026 mass production)

Amazon: Trainium2, Inferentia2, claiming 50% better price-performance

Meta: Artemis (inference), MTIA (recommendation)

OpenAI: Titan (Broadcom development, H2 2026 shipment)

What This Means for Memory Companies

This structure could be either threat or opportunity for memory companies.

Threat: As hyperscalers reduce their dependence on NVIDIA, memory companies are pushed to diversify away from their largest customer. Custom ASICs optimize for specific workloads, fragmenting HBM specs. The shift toward inference creates different memory requirements than training.

Opportunity: Custom ASICs also need HBM, so total demand continues growing. More design partners expand custom HBM opportunities. Samsung is actively pursuing this. A more diverse ASIC customer base offers a way around the NVIDIA-SK Hynix monopoly.

3.4 The Geopolitical Paradox

Throughout 2025, Samsung and SK Hynix halted sales of older semiconductor equipment to Chinese companies due to US regulatory backlash and tariff concerns. This effectively limited production capacity in that region.

But paradoxically, geopolitical tensions may work in favor of the existing memory trio. With China blocked, Intel/Saimemory not ready yet, and hyperscalers signing multi-year contracts with existing suppliers for supply stability, the current oligopoly structure can only become more entrenched.

Part 4: Why This Time Is Different

In my view, the claim that "when the AI bubble bursts, memory demand disappears" is wrong. Seeing this cycle the same as past ones is like viewing the smartphone revolution as "just another PC cycle."

4.1 Exploding Switching Costs

Currently, big tech companies like NVIDIA, Amazon, Microsoft, Broadcom, and Marvell are leading custom HBM. When they co-design custom base dies with SK Hynix/Micron/Samsung, integrate logic, and optimize packaging, this isn't a simple supply contract but a multi-year, multi-billion dollar co-engineering partnership.

Starting a custom HBM design with a new vendor means spending 18-24 months reworking logic integration and the software stack, re-integrating packaging processes, and repeating the yield learning curve. In other words, the memory cycle can take on an entirely different structure from the old commodity business.

4.2 Inference Eats More Memory Than Training

Future AI trends will increasingly shift to inference. And inference is far more memory-intensive than training. As AI computing transitions from training to inference, inference workloads are expected to account for 67% of total AI compute by 2026.
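One concrete reason inference leans so hard on memory is the KV cache, which grows with every token of context. Below is a minimal sketch of the standard sizing formula. The model shape (80 layers, 8 KV heads, head dimension 128, 16-bit values) is a hypothetical configuration chosen for illustration, not any vendor's published spec.

```python
def kv_cache_bytes(layers: int, kv_heads: int, head_dim: int,
                   seq_len: int, batch: int = 1, bytes_per_value: int = 2) -> int:
    """Bytes needed to hold keys and values for every layer and every token."""
    # Factor of 2: one tensor for keys and one for values.
    return 2 * layers * kv_heads * head_dim * seq_len * batch * bytes_per_value


# Hypothetical model shape and context length (assumptions, see lead-in).
size = kv_cache_bytes(layers=80, kv_heads=8, head_dim=128, seq_len=131_072)
print(f"{size / 2**30:.0f} GiB of KV cache for one 128K-token sequence")
```

Under these assumed numbers, a single 128K-token request needs 40 GiB for KV cache alone, before any model weights, and the figure scales linearly with batch size and context length.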

4.3 The Roadmap Is Already Set Through 2035

The HBM roadmap is long-term. Each generation brings capacity increases and 2-3x bandwidth growth. This isn't a demand forecast. It's a physics roadmap. Regardless of AI demand, transistors keep shrinking, stacking keeps rising, interfaces keep widening.

Part 5: Responding to Market Pessimism

In the first week of February 2026, the market shook as Big Tech earnings season ended. Amazon stock fell 11%, Microsoft dropped 17% year-to-date. The tech-heavy Nasdaq declined 4% over a week.

The reason investors were alarmed is simple. The 2026 CapEx scale was too large.

The Reality in Numbers:

Amazon, Alphabet, Meta, Microsoft combined: approximately $630-650B

Amazon alone: $200B (50%+ increase from 2025's $130B)

Alphabet: $175-185B (nearly double 2025)

Meta: $115-135B (nearly double from 2025's $72B)

Microsoft: approximately $145B (analyst estimates)

A 60% increase from 2025's $400B. Compared to 2024's $245B, a 165% increase. Fortune reported "Big Tech AI spending approaches Sweden's economy size."
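The percentages are easy to re-derive from the figures quoted above. This sketch uses only the article's own numbers, in billions of dollars.

```python
# 2026 CapEx in $B as (low, high), taken from the figures quoted in the article.
capex_2026 = {
    "Amazon": (200, 200),
    "Alphabet": (175, 185),
    "Meta": (115, 135),
    "Microsoft": (145, 145),  # analyst estimate, per the article
}


def pct_increase(new: float, old: float) -> float:
    """Percentage increase from old to new."""
    return (new - old) / old * 100


low = sum(lo for lo, _ in capex_2026.values())
high = sum(hi for _, hi in capex_2026.values())
print(f"sum of the four line items: ${low}B to ${high}B")

for total in (630, 650):
    vs_2025 = pct_increase(total, 400)
    vs_2024 = pct_increase(total, 245)
    print(f"${total}B total: +{vs_2025:.1f}% vs 2025, +{vs_2024:.1f}% vs 2024")
```

The line items sum to $635-665B, slightly above the quoted $630-650B combined figure. The 60% increase falls inside the range implied by that combined figure, and the 165% increase matches its upper end.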

The problem is FCF (free cash flow). Morgan Stanley forecasts Amazon at approximately $17B negative FCF in 2026, while Bank of America sees a $28B deficit. Barclays analyzes that Meta's FCF could plunge 90% and turn negative in 2027-2028.

Seeing these numbers, market pessimists attack.

Core Pessimistic Arguments: "SaaS profitability is collapsing. AI service pricing is collapsing in a chicken game. When hyperscalers reduce NVIDIA dependence with in-house chips, HBM demand will also decline. When Samsung attacks with pricing, HBM premium collapses and a paradoxical recession comes. Chinese low-price offensive will cool the entire memory sector."

But this logic is wrong in several places.

Rebuttal 1: CapEx Didn't Shrink, It Exploded

The claim that poor SaaS profitability leads to AI infrastructure collapse is a stretch. SaaS is one pillar of cloud demand, but the main driver of AI infrastructure investment isn't "SaaS subscription growth" but data center expansion for model training and inference.

Did Big Tech CapEx actually decrease in 2026? No. It exploded to $630-650B. Amazon CEO Andy Jassy stated "Due to tremendous demand for AI, chips, robotics, and LEO satellites, we'll invest approximately $200B in 2026 and expect strong long-term ROIC."

Rebuttal 2: Price Cuts Are Tiering, Not a Chicken Game

It's confirmed that Google introduced low-price plans. But they also operate high-price plans simultaneously. Google AI Plus expanded to $7.99/month while also running ultra-premium plans like $249.99/month.

This isn't a single chicken game. It's a typical portfolio strategy that runs "entry-level freemium to paid conversion" and a "professional high-price tier" side by side. Low-price plans are user conversion funnels, not market destruction.

SaaS isn't collapsing but restructuring its pricing model. That process creates margin volatility, not demand collapse.

Rebuttal 3: In-House Chips Don't Reduce HBM Demand

The idea that in-house chip expansion leads to reduced HBM demand is a leap. Actually, the opposite.

As custom ASICs proliferate, memory shifts faster toward a custom HBM platform built on co-design partnerships rather than remaining a commodity. As the single GPU channel weakens, memory companies accelerate "multi-channel platformization."

Broadcom's comment that the AI semiconductor opportunity could grow to $60-90B by 2027 supports the view that what's growing is not "one GPU company's cycle" but "the entire AI infrastructure pie."

As covered in Part 3, Samsung has already restructured its strategy around securing custom ASIC customers. According to industry reports, a significant portion of Samsung's 2026 HBM output could go to custom ASIC customers. An important hedge when GPU platform awards are highly competitive.

Rebuttal 4: Samsung's Price Offensive Is Condition Adjustment, Not Recession

Reports suggest Samsung is playing the price-cut card to catch up in HBM. But there are also reports that Samsung itself points to supply tightness in 2026-2027 and says upward pressure on memory prices may continue.

Put the two together and the conclusion is this: price cuts are likely "condition adjustments" on limited volume rather than "demand-collapse dumping."

Due to qualification, yield, and packaging capacity constraints, it's structurally difficult for any one company to deliberately oversupply the HBM market. Major hyperscalers and GPU manufacturers have locked in over 90% of 2026 production.

In this situation, "paradoxical recession" is certainly a possible scenario, but its likelihood should be seen as very slim.

Rebuttal 5: Chinese Offensive Only Applies to Commodity

Market rumors existed that Chinese companies were dumping DDR4. Recently, supply chain observations suggest they "abandoned low-price strategy and pivoted to DDR5."

But critically, HBM isn't simple bit supply but platform competition bundling TSV, stacking, packaging, and customer co-validation. Hard to penetrate with just "low-price volume."

Chinese low-price pressure can create commodity DRAM downside but should be viewed separately from HBM's platform domain pricing structure.

Upstream Pressure Strengthens Platform Transition

The core error of market pessimism is bundling different markets together. SaaS, AI service pricing, HBM, commodity DRAM all have different dynamics.

More importantly, this pessimism paradoxically strengthens the memory optimism thesis.

SaaS monetization wavers → Big Tech pursues efficiency through in-house chips and system optimization → Memory becomes not more generic but "components that stick to and optimize for each customer."

This flow doesn't weaken "memory platformization" but strengthens it.

Upstream profitability pressure may be real. But that doesn't mean infrastructure collapse. CapEx is still at all-time highs. Price competition is price structure tiering, not a single chicken game. In-house chip expansion calls for custom HBM and co-design expansion more than HBM demand reduction.

Investors are anxious, true. But the reason they're anxious isn't "because there's no demand" but "because they're spending too much." And that "too much" will ultimately flow to HBM.

Part 6: Three Technologies Changing Market Structure

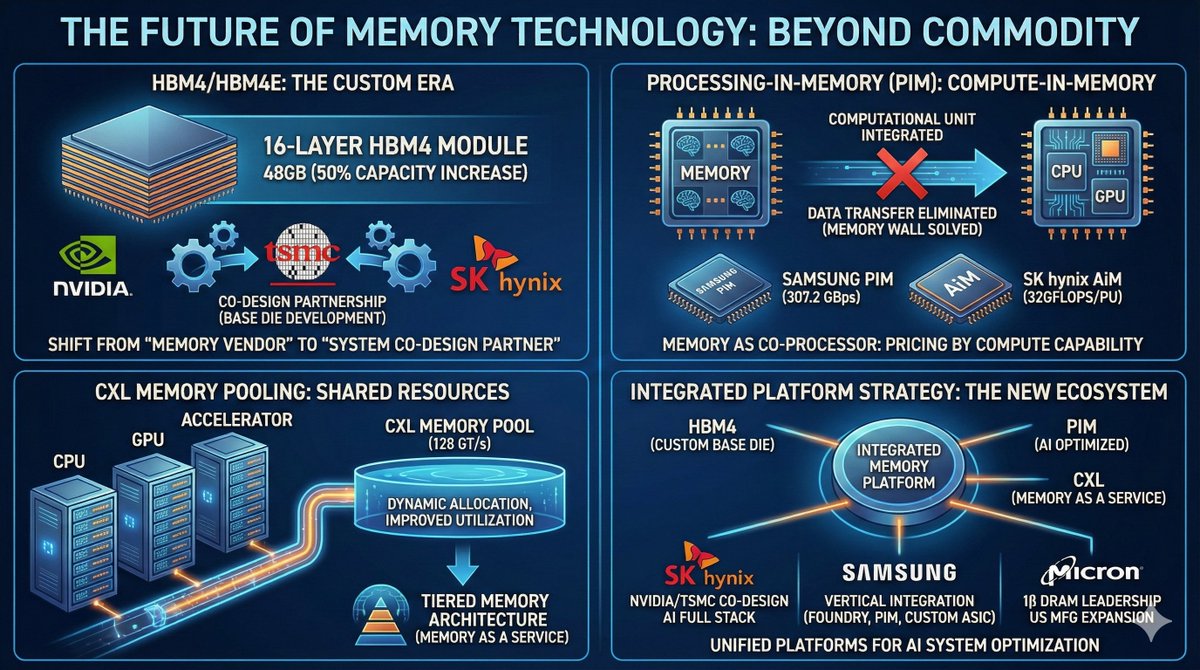

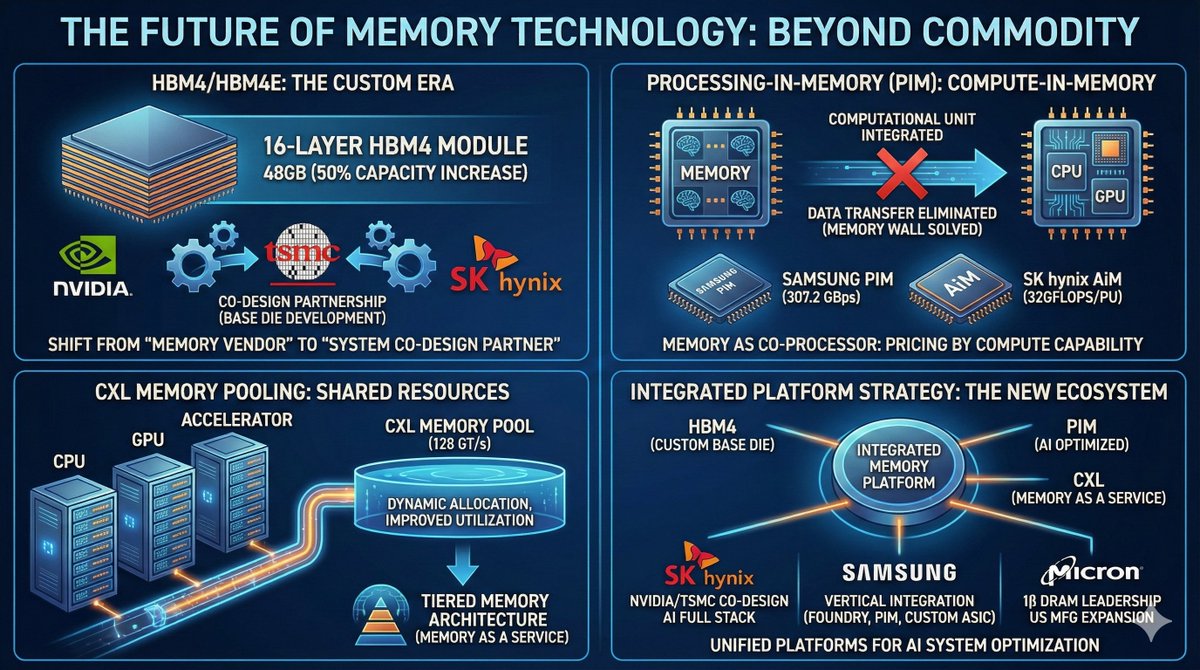

6.1 HBM4/HBM4E

SK Hynix demonstrated the world's first 16-layer HBM4 module at CES 2026. 48GB stack, 50% capacity increase over current HBM3E. SK Hynix is developing next-generation HBM base dies in collaboration with NVIDIA and TSMC. Micron's HBM4 achieves over 2.0 TB/s bandwidth with 1β DRAM process, 12-high packaging, and MBIST.
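Per-stack bandwidth is just interface width times per-pin data rate. The sketch below assumes the JEDEC interface widths (1024 bits for HBM2, 2048 bits for HBM4), which the article itself does not state, and back-solves the pin rate needed to reach 2.0 TB/s.

```python
def stack_bandwidth_gbs(interface_bits: int, pin_rate_gbps: float) -> float:
    """Peak bandwidth of one stack in GB/s (bits per second across all pins / 8)."""
    return interface_bits * pin_rate_gbps / 8


def required_pin_rate_gbps(interface_bits: int, target_gbs: float) -> float:
    """Per-pin data rate in Gb/s needed to hit a target stack bandwidth."""
    return target_gbs * 8 / interface_bits


# Sanity check on the formula: an HBM2 stack at 2.4 Gb/s per pin (assumed rate).
print(f"HBM2: {stack_bandwidth_gbs(1024, 2.4):.1f} GB/s")

# Pin rate an HBM4 stack would need for 2.0 TB/s, assuming a 2048-bit interface.
print(f"HBM4: {required_pin_rate_gbps(2048, 2000):.4f} Gb/s per pin for 2.0 TB/s")
```

The HBM2 check reproduces the 307.2 GB/s figure quoted for HBM2-based PIM in 6.2, and the HBM4 case works out to about 7.8 Gb/s per pin under the assumed interface width.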

Market restructuring effect: Technology leadership and customer partnership become even more important. Identity shifts from "memory company" to "system co-design partner." Companies without custom HBM capability remain in commodity DRAM only.

6.2 Processing-in-Memory

PIM integrates computational units into the memory chip itself, processing directly where data is stored. Eliminates extensive data transfer between memory and CPU/GPU, directly solving the memory wall.
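A toy model makes the data-movement argument concrete. It counts bytes crossing the memory bus for one matrix-vector multiply, assuming that with PIM the weight matrix never leaves the memory device. Real PIM designs have more overheads than this captures, and the 4096 x 4096 matrix size is a hypothetical example.

```python
def bus_bytes_conventional(rows: int, cols: int, bytes_per_value: int = 2) -> int:
    """Weights, input vector, and output vector all cross the memory bus."""
    return (rows * cols + cols + rows) * bytes_per_value


def bus_bytes_pim(rows: int, cols: int, bytes_per_value: int = 2) -> int:
    """Only the input and output vectors cross the bus; weights stay in memory."""
    return (cols + rows) * bytes_per_value


rows = cols = 4096  # hypothetical layer size
conventional = bus_bytes_conventional(rows, cols)
pim = bus_bytes_pim(rows, cols)
print(f"conventional: {conventional / 2**20:.2f} MiB over the bus")
print(f"PIM:          {pim / 2**10:.0f} KiB over the bus")
print(f"reduction:    {conventional // pim}x")
```

In this simplified model, bus traffic drops by about 2049x, because the weights dominate the bytes moved and they no longer travel.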

Samsung's HBM2-based PIM provides 307.2 GB/s of bandwidth. SK Hynix's GDDR6-based AiM supports vector-matrix multiplication (VMM) at 32 GFLOPS per processing unit (PU).

Commercialization is still early. Research and prototypes are active, but full proliferation depends on software ecosystem and economics. PIM instruction set, compiler support, and application optimization must mature together.

Market structure impact: Memory evolves from "storage" to "co-processor." New pricing model emerges. Not price per bit but including compute capability. Software ecosystem leadership determines competitiveness.

6.3 CXL Memory Pooling

CXL redefines memory architecture. Turns distributed memory into a unified pool. CPU, GPU, and accelerators dynamically fetch data from the same memory pool. Eliminates redundant data transfer, improves memory utilization.
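The utilization benefit can be sketched with a toy provisioning model. Without pooling, each host must be sized for its own peak. With a shared pool, capacity only has to cover the peak of the combined demand. The host names and demand numbers are made up for illustration.

```python
# Hypothetical memory demand in GB for three hosts over three time steps.
demand_gb = {
    "host_a": [100, 400, 150],
    "host_b": [350, 120, 100],
    "host_c": [90, 110, 380],
}


def static_provisioning(demand: dict[str, list[int]]) -> int:
    """Each host gets enough local memory for its own peak."""
    return sum(max(series) for series in demand.values())


def pooled_provisioning(demand: dict[str, list[int]]) -> int:
    """One shared pool sized for the peak of total demand at any time step."""
    return max(sum(step) for step in zip(*demand.values()))


static_gb = static_provisioning(demand_gb)
pooled_gb = pooled_provisioning(demand_gb)
print(f"static: {static_gb} GB, pooled: {pooled_gb} GB")
print(f"memory saved by pooling: {1 - pooled_gb / static_gb:.0%}")
```

With these made-up numbers, static provisioning needs 1130 GB while a pool needs 630 GB, because the hosts do not peak at the same time. The saving disappears if all hosts peak together.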

CXL 4.0 increases bandwidth to 128 GT/s and introduces bundled ports targeting very large connection bandwidth depending on configuration.

Implementation is complex. GPU directly accessing CXL memory pools with coherency is still under various implementation discussions, with significant system design constraints. CPU-side CXL memory expansion and data movement optimization are being discussed to alleviate GPU memory pressure.

Market structure impact: Transition to tiered memory architecture. New product line for memory companies emerges: CXL memory expanders. New collaboration model with hyperscalers: memory as a service.

6.4 Integrated Strategy

The three technologies mentioned above (HBM4, PIM, CXL) don't operate separately. Memory companies are building integrated platforms.

SK Hynix: Unveiled custom HBM, AI-optimized DRAM, and next-generation NAND at SK AI Summit 2025. The core is next-generation HBM base die development and entire AI system co-design through NVIDIA and TSMC collaboration.

Samsung: Vertical integration leveraging its own foundry. Produces HBM4 base dies on in-house processes and builds on its experience integrating PIM into HBM2. Targets the custom ASIC market with 2026 Google TPU supply.

Micron: 1β DRAM process leadership and close TSMC collaboration. US production expansion backed by $6.1 billion in CHIPS Act funding. Differentiates on power efficiency.

Conclusion

For 70 years, the memory business was commodity. But now it's becoming platform. In the AI era, memory is the foundational infrastructure of intelligence and will become a source of value creation itself.

Looking back 10 years from now, won't 2024-2026 be remembered as the "decisive inflection point" of the memory industry?

[my note: I decided to post the whole article on SI in case it ever becomes inaccessible.]
